Chapter 5 A useful toolbox

5.1 Phase estimation

In this section we report the theorems for the subroutines that are often used to create new quantum algorithms. Often, we report multiple formulations, so that the working quantum algorithm researcher can pick the best version for them.

Theorem 5.1 (Phase estimation (Kitaev 1996)) Let \(U\) be a unitary operator with eigenvectors \(|v_j\rangle\) and eigenvalues \(e^{i \theta_j}\) for \(\theta_j \in [-\pi, \pi]\), i.e. we have \(U|v_j\rangle = e^{i \theta_j}|v_j\rangle\) for \(j \in [n]\). For a precision parameter \(\epsilon > 0\), there exists a quantum algorithm that runs in time \(O(\frac{T(U)\log(n)}{\epsilon}))\) and with probability \(1 - 1/poly(n)\) maps a state \(|\phi_i\rangle = \sum_{j \in [n]} \alpha_j|v_j\rangle\) to the state \(\sum_{j \in [n]} \alpha_j |v_j\rangle|\bar{\theta_j}\rangle\) such that \(|\bar{\theta}_j - \theta_j| < \epsilon\) for all \(j \in [n]\).

Theorem 5.2 (Error and probability of failure of phase estimation (Nielsen and Chuang 2002) Section 5.2 and (Nannicini 2019)) Let \(0.a=a_1, \dots a_q\) be the output of phase estimation when applied to an eigenstate with phase \(\phi\). If we use \(q+\lceil\log(2+\frac{1}{2\delta})\rceil\) qubits of precision, the first \(q\) bits of \(a\) will be accurate with probability at least \(1-\delta\), i.e. \[Pr[|\phi - \sum_{j=1}^q a_j2^{-j}| \leq 2^{-q}] \geq 1-\delta\]

While the standard implementation of phase estimation is based on the quantum Fourier transform (QFT) circuit (Nielsen and Chuang 2002), there have been various improvements (Ahmadi and Chiang 2010) which try to soften the dependence on the QFT circuit, while retaining the accuracy guarantees offered by the QFT in estimating the angles \(\theta_j\).

Note that the same algorithm described in theorem 5.1 can be made ‘’consistent’’, in the sense of (Ta-Shma 2013) and (Andris Ambainis 2012). While in the original formulation of phase estimation two different runs might return different estimates for \(\overline{\theta}_j\), with a consistent phase estimation this estimate is fixed, with high probability. This means that the error between two different runs of phase estimation is almost deterministic.

5.2 Grover’s algorithm, amplitude games

Theorem 5.3 (Grover's algorithm) Let \(N=2^n\) for \(n>0\). Given quantum oracle access \(O_x: |i\rangle\mapsto|i\rangle|x_i\rangle\) to a vector \(x=\{0,1\}^N\) where only one element of \(x\) is 1, there is a quantum algorithm that finds the index of that element using \(O_x\) only \(O(\sqrt{N})\) times.

This problem can be generalized to the case where there are multiple elements “marked” as good solutions. If we know the number of solutions in advance, the algorithm can be modified such that it fails with probability 0.

5.2.1 Amplitude estimation

Amplitude amplification and amplitude estimation are two of the workhorses of quantum algorithms.

Theorem 5.4 (Amplitude estimation, (Brassard et al. 2002)) Given a quantum algorithm \[A:|0\rangle \to \sqrt{p}|y,1\rangle + \sqrt{1-p}|G,0\rangle\] where \(|G\rangle\) is some garbage state, then the amplitude estimation algorithm, using exactly \(P\) iterations of the algorithm \(A\) for any positive integer \(P\), outputs \(\tilde{p}\) \((0 \le \tilde p \le 1)\) such that \[ |\tilde{p}-p|\le 2\pi \frac{\sqrt{p(1-p)}}{P}+\left(\frac{\pi}{P}\right)^2 \] with probability at least \(8/\pi^2\). If \(p=0\) then \(\tilde{p}=0\) with certainty, and if \(p=1\) and \(P\) is even, then \(\tilde{p}=1\) with certainty.

Theorem 5.5 (Amplitude estimation (Brassard et al. 2002), formulation of (Montanaro 2015)) There is a quantum algorithm called amplitude estimation which takes as input one copy of a quantum state \(|\psi\rangle\), a unitary transformation \(U=2|\psi\rangle\langle\psi|-I\), a unitary transformation \(V=I-2P\) for some projector \(P\), and an integer \(t\). The algorithm outputs \(\tilde{a}\), an estimate of \(a=\langle\psi|P|\psi\rangle\), such that: \[|\tilde{a}-a| \leq 2\pi\frac{\sqrt{a(1-a)}}{t} + \frac{\pi^2}{t^2}\] with probability at least \(8/\pi^2\), using \(U\) and \(V\) \(t\) times each. If \(a=0\) then \(\tilde{a}=0\) with certainty, and if \(a=1\) and \(t\) is even, then \(\tilde{a}=1\) with certainty.

In the original version of the Grover’s algorithm we assume to know the number of marked elements, and therefore we can derive the correct number of iterations. Later on, a fixed-point version of amplitude amplification was proposed (Brassard et al. 2002) (L. K. Grover 2005), which was then optimized in (Yoder, Low, and Chuang 2014). These versions do not require to know the number of iterations in advance. These results fundamentally leverage the trick that we reported in Proposition 5.1.

Let’s see in practice how to use Theorem 5.4. Suppose that we want to estimate \(a\) with relative error \(\epsilon\). What is the number of times that we have to use the two unitaries? Let’s check that it suffices to take \(t=\frac{2 \pi }{\epsilon \sqrt{a}}\), as

\[\begin{align} \left| a - \widetilde{a} \right| \leq & \frac{2 \pi\sqrt{a} \sqrt{a(1-a)}\epsilon}{2\pi} + \frac{\pi^2 \epsilon^2a}{4 \pi^2} = \epsilon a \sqrt{1-a} + \frac{\epsilon^2 a}{4} \nonumber \\ \leq & \epsilon a (1 - \frac{a}{2}) + \frac{\epsilon^2 a}{4} = \epsilon a\left( 1- \frac{a}{2}+ \frac{\epsilon}{4}\right) \leq \epsilon a. \end{align}\]

In the previous equation we used the Taylor expansion of \(\sqrt{1-x}\) to the second order, i.e. \(\sqrt{1-x} \leq 1 - x/2\), and the fact that \(\epsilon,a < 1\) in the last inequality. The asymptotic runtime of the algorithm is thus \(O(\frac{1}{\epsilon \sqrt{a}})\).

What if we want to have an absolute error now? We have some of options. The simplest one is to note that a relative error of a quantity between \(0\) and \(1\) automatically translates in an absolute error. But this might not be the most elegant thing to do: since an absolute error for a quantity between \(0\) and \(1\) is “worse” than the relative error on the same quantity, we might want to save some resources, i.e. decrease the number of calls to the oracles. Let’s set \(t=\frac{2\pi}{\epsilon}\) and observe that

\[\begin{align} \left| a - \widetilde{a} \right| \leq & \frac{2 \pi \sqrt{a(1-a)}\epsilon}{2\pi} + \frac{\pi^2 \epsilon^2}{4 \pi^2} = \epsilon \sqrt{a}\sqrt{1-a} + \frac{\epsilon^2}{4} \nonumber \\ \leq & \epsilon \sqrt{a}(1 - \frac{a}{2}) + \frac{\epsilon^2}{4} = \epsilon\left(\sqrt{a}(1- \frac{a}{2})+ \frac{\epsilon}{4}\right) \leq \epsilon. \end{align}\]

Here, in addition to the tricks used in the relative error, we also used that \(\sqrt{a}\leq 1\).

Exercise 5.1 ((Hard)) Another idea is to realize that we could run the algorithm returning the relative error as a black box, and set the error to \(\epsilon'=\epsilon/a\). In this way we might estimate a relative error \(\epsilon'a=\epsilon\), with the hope to save some resources. What is the impact of this operation in the runtime of the algorithm? It’s simple to see that the runtime becomes \(O(\frac{1}{\frac{\epsilon}{a}\sqrt{a}}) = O(\frac{\sqrt{a}}{\epsilon})\). Can we check if setting \(t=\frac{2\pi \sqrt{a}}{\epsilon}\) can give an absolute error in \(O(\frac{\sqrt{a}}{\epsilon})\) runtime? What is difficult about it?

The solution to the previous exercise consist in adding a term \(\frac{1}{\sqrt{\epsilon}}\) in the number of iterations \(t\). If we set \(t = \lceil 2\pi\left(\frac{2\sqrt{a}}{\epsilon}\right) + \frac{1}{\sqrt{\epsilon}} \rceil\) we can get an absolute error.

Perhaps a simpler formulation, which hides the complexity of the low-level implementation of the algorithm, and is thus more suitable to be used in quantum algorithms for machine learning is the following:

Lemma 5.1 (Amplitude amplification and estimation (Kerenidis and Prakash 2020) ) If there is a unitary operator \(U\) such that \(U|0\rangle^{l}= |\phi\rangle = \sin(\theta) |x, 0\rangle + \cos(\theta) |G, 0^\bot\rangle\) then \(\sin^{2}(\theta)\) can be estimated to multiplicative error \(\eta\) in time \(O(\frac{T(U)}{\eta \sin(\theta)})\) and \(|x\rangle\) can be generated in expected time \(O(\frac{T(U)}{\sin (\theta)})\) where \(T(U)\) is the time to implement \(U\).

Recently, various researches worked on improvements of amplitude estimation by getting rid of the part of the original algorithm that performed the phase estimation (i.e. the Quantum Fourier Transform (Nielsen and Chuang 2002)) (Grinko et al. 2019), (Aaronson and Rall 2020). As the QFT is not considered to be a NISQ subroutine, these results bring more hope to apply these algorithms in useful scenarios in the first quantum computers.

FIrst proposed MLQAE.

QIP2023 with a particular choice of probability, while before we had results “on average”.

(ref:ambainis2012variable) (A. Ambainis 2012) (ref:chakraborty2022regularized) (Chakraborty, Morolia, and Peduri 2022)

Theorem 5.6 (Variable Time Search (ref:ambainis2012variable)) Let \(\mathcal A_1, \ldots, \mathcal A_n\) be quantum algorithms that return true or false and run in unknown times \(T_1, \ldots, T_n\), respectively. Suppose that each \(\mathcal A_i\) outputs the correct answer with probability at least \(2/3\). Then there exists a quantum algorithm with success probability at least \(2/3\) that checks whether at least one of \(\mathcal A_i\) returns true and runs in time \[\widetilde O\left(\sqrt{T_1^2+\ldots+T_n^2}\right).\]

Definition 5.1 (Variabile-stopping-time algorithm (ref:ambainis2012variable) (ref:chakraborty2022regularized)) A quantum algorithms \(\mathcal{A}\) acting on \(\mathcal{H}\) that can be written as \(m\) quantum sub-algorithms \(\mathcal{A} = \mathcal{A}_m\mathcal{A}_{m-1}\dots \mathcal{A}_1\) is called a variable stopping time algorithm if \(\mathcal{H}=\mathcal{H}_C \otimes \mathcal{H}_{A}\), where \(\mathcal{H}_C \otimes_{i=1}^m \mathcal{H}_{C_i}\) with \(\mathcal{H}_{C_i} = Span(|0\rangle, |1\rangle)\) and each unitary \(\mathcal{A}_j\) acts on \(\mathcal{H}_{C_i} \otimes \mathcal{H}_A\) controlled on the first \(j-1\) qubits \(|0\rangle^{\otimes j-1} \in \otimes_{i=1}^{j-1} \mathcal{H}_{C_i}\) being in the all zero state.

5.2.3 Example: estimating average and variance of a function

Albeit the ideas treated in this post are somehow well-digested in the mind of many quantum algorithms developers, this example is very useful to get a practical understanding of amplitude estimation. Notably, much more precise and involved discussion around this topic can be found in chapter 8.

Suppose we have a random variable \(X\) described by a certain probability distribution over \(N\) different outcomes, and a function \(f: \{0,\cdots N\} \to [0,1]\) defined over this distribution. How can we use quantum computers to evaluate some properties of \(f\) such as expected value and variance faster than classical computers?

Let’s start by translating into the quantum realm these two mathematical objects. The probability distribution is (surprise surprise) represented in our quantum computer by a quantum state over \(n=\lceil \log N \rceil\) qubits. \[|\psi\rangle = \sum_{i=0}^{N-1} \sqrt{p_i} |i\rangle \] where the probability of measuring the state \(|i\rangle\) is \(p_i,\) for \(p_i \in [0, 1]\). Basically, each bases of the Hilbert space represent an outcome of the random variable.

The quantization of the function \(f\) is represented by a linear operator \(F\) acting on a new ancilla qubit (here on the right) as:

\[ F: |i\rangle|0\rangle \to |i\rangle\left(\sqrt{1-f(i)}|0\rangle + \sqrt{f(i)}|1\rangle\right) \]

If we apply \(F\) with \(|\psi\rangle\) as input state we get:

\[ \sum_{i=0}^{N-1} \sqrt{1-f(i)}\sqrt{p_i}|i\rangle|0\rangle + \sum_{i=0}^{N-1} \sqrt{f(i)}\sqrt{p_i}|i\rangle|1\rangle \]

Observe that the probability of measuring \(|1\rangle\) in the ancilla qubit is \(\sum_{i=0}^{N-1}p_if(i)\), which is \(E[f(X)]\). By sampling the ancilla qubit we won’t get any speedup compared to a classical randomized algorithm with oracle access to the function, but applying amplitude estimation (Brassard et al. 2002) to the ancilla qubit on the right, we can get an estimate of \(E[F(X)]\) with precision \(\epsilon\), quadratically faster than a classical computer: in only \(O(\frac{1}{\epsilon})\) queries to \(f\).

Finally, observe that:

- if we chose \(f(i)=\frac{i}{N-1}\) we are able to estimate \(E[\frac{X}{N-1}]\) (which, since we know \(N\) gives us an estimate of the expected value \(E[X]\))

- if we chose \(f(i)=\frac{i^2}{(N-1)^2}\) instead, we can estimate \(E[X^2]\) and using this along with the previous choice of \(f\) we can estimate the variance of \(X\): \(E[X^2] - E[X]^2\).

Exercise 5.2 Can you prove the previous two points?

We will see in Chapter 8 why in many real-world cases this is not the best way of estimating \(\mathbb{E}(f(X))\).

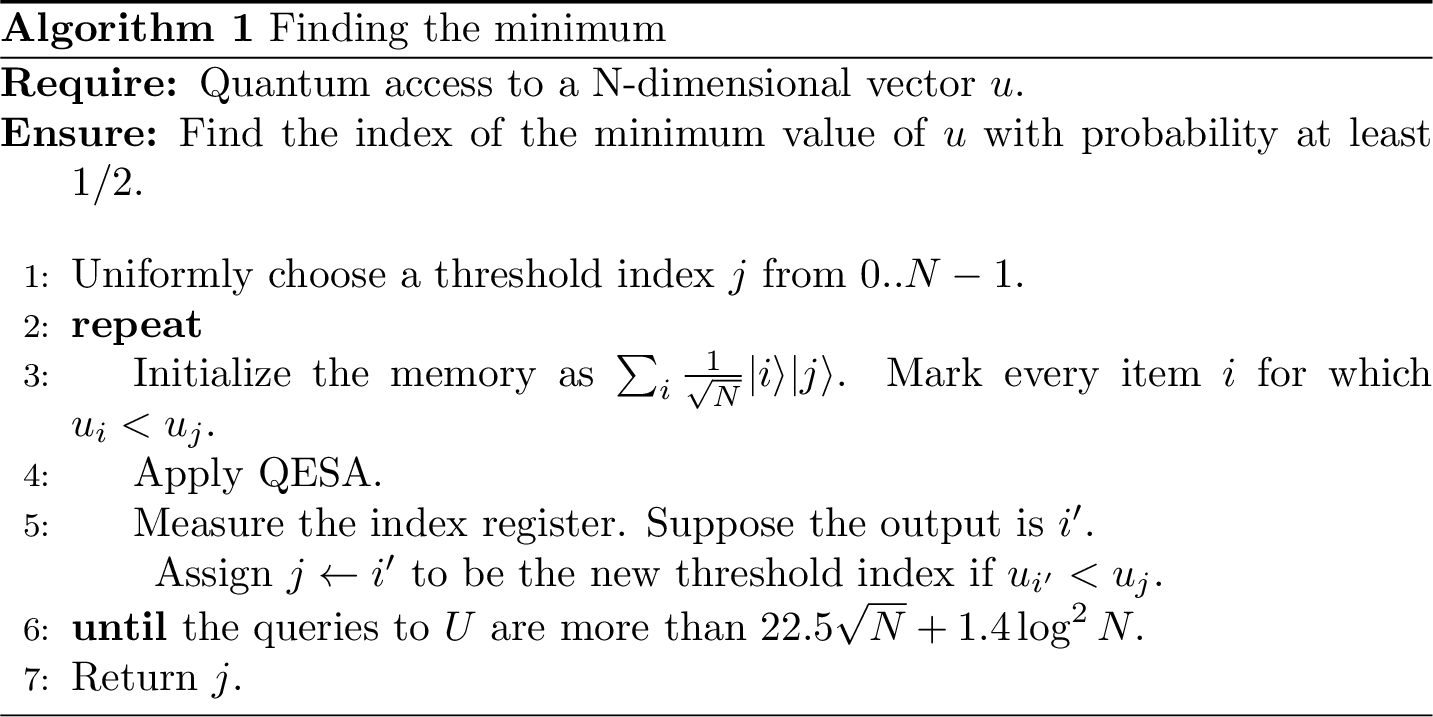

5.3 Finding the minimum

We now want to show an algorithm that finds the minimum among \(N\) unsorted values \(u_{j\in[N]}\). As the Grover’s algorithm, we work in the oracle model, so we assume to have quantum access to a the vector \(u\). Without loss of generality we can take \(N=2^n\), but if the length of our list is not a power of 2, we can just pad the rest of the elements in the list with zeroes. The statement of the theorem is the following.

Lemma 5.2 (Quantum minimum finding (Durr and Hoyer 1996)) Given quantum access to a vector \(u \in [0,1]^N\) via the operation \(|j\rangle |\bar 0\rangle \to |j\rangle | u_j\rangle\) on \(\mathcal{O}\left( \log N \right)\) qubits, where \(u_j\) is encoded to additive accuracy \(\mathcal{O}\left( 1/N \right)\). Then, we can find the minimum \(u_{\min} = \min_{j\in[N]} u_j\) with success probability \(1-\delta\) with \(\mathcal{O}\left( \sqrt N \log \left (\frac{1}{\delta}\right) \right)\) queries and \(\widetilde{\mathcal{O}}\left( \sqrt N \log \left( \frac{1}{\delta}\right ) \right)\) quantum gates.

Another formulation is the following:

Theorem 5.7 (Quantum Minimum Finding (Durr and Hoyer 1996) formulation of (ambainis2019quantum)) Let \(a_1, \ldots, a_n\) be integers, accessed by a procedure \(\mathcal P\). There exists a quantum algorithm that finds \(\min_{i=1}^n \{a_i\}\) with success probability at least \(2/3\) using \(O(\sqrt n)\) applications of \(\mathcal P\).

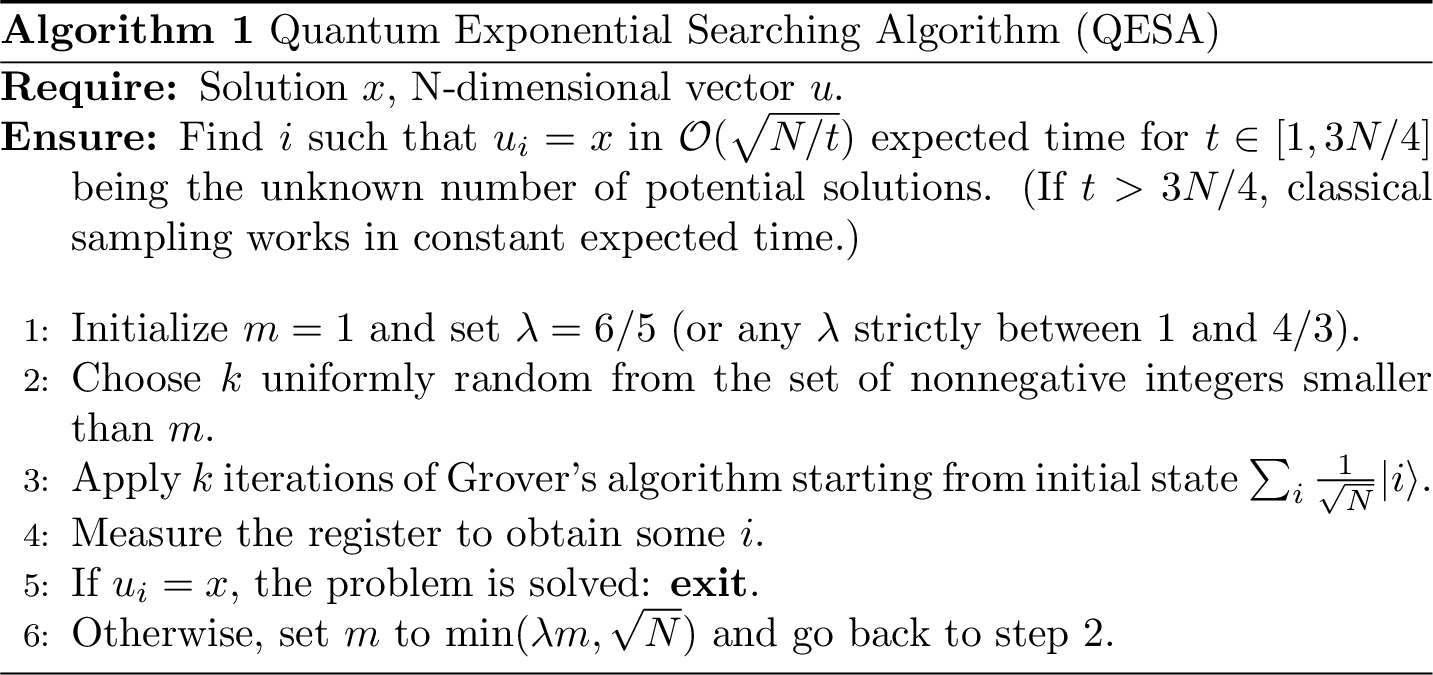

This algorithm utilizes a subroutine called quantum exponential searching algorithm (QESA), which is again composed of amplitude amplification (Grover) and amplitude estimation. For simplicity, we assume those values are distinct. The general idea is marking indices of values below a chosen threshold, returning and picking one of them as the new threshold with equal probability. After some iterations, it is expected to yield the lowest value. Note that the step of marking lower values is taken as an oracle with gate complexity of \(O(1)\). We first present to algorithm and keep the explanation for later.

Figure 5.1: Finding the minimum

Figure 5.2: Quantum Exponential Searching Algorithm

In the basic Grover’s algorithm with one solution, \(m = CI\left(\frac{\pi}{4}\sqrt{N}\right)\) iterations give the true output with error probability at most \(1/N\) for \(N \gg 1\), where \(CI(x)\) is the closest integer to \(x\). The result can be generalized in the case of \(t \ll N\) solutions that the error rate is reduced to at most \(t/N\) after exactly \(m = CI\left(\frac{\pi-2\arcsin{\sqrt{t/N}}}{4\arcsin{\sqrt{t/N}}}\right) = \left \lfloor \frac{\pi}{4\arcsin{\sqrt{t/N}}} \right \rfloor\) iterations (and thus oracle calls), with an upper bound \(m \leq \frac{\pi}{4}\sqrt{\frac{N}{t}}\). However, a problem arises when we have to amplify amplitudes corresponding to values below the threshold. In practice, the number of candidates is unknown and varies for each threshold. QESA is a generalized algorithm to find a solution for unknown \(t\) with probability at least \(1/4\) in \(O(\sqrt{N/t})\), which is motivated by the following observation.

Proposition 5.1 (Quantum Exponential Searching Algorithm (Boyer et al. 1998)) Let \(t\) be the (unknown) number of solutions and let \(\theta\) be such that \(\sin^2{\theta} = t/N\). Let \(m\) be an arbitrary positive integer. Let \(k\) be an integer chosen at random according to the uniform distribution between \(0\) and \(m − 1\). If we observe the register after applying \(k\) iterations of Grover’s algorithm starting from the initial state \(\sum_{i}\frac{1}{\sqrt{N}}|i\rangle\), the probability of obtaining a solution is exactly \(P_m = \frac{1}{2} - \frac{\sin{(4m\theta)}}{4m\sin{(2\theta)}}\). In particular, \(P_m \geq 1/4\) when \(m \geq 1/\sin{(2\theta)}\).

As QESA is expected to be done in \(O(\sqrt{N/t})\) queries, one can deduce that the expected number of queries for the minimum-finding algorithm with success probability at least \(1/2\) is \(O(\sqrt{N})\). Repeating the algorithm \(c\) times increases the success probability to \(1-1/2^c\). In terms of quantum gates, the Grover part and the initialization part use \(O(\sqrt{N})\) and \(O(\log{N})\) respectively.

5.4 Quantum linear algebra

A central tool for quantum algorithm in machine learning are the subroutines for performing quantum linear algebraic routines on a quantum computer. From the first work of (Harrow, Hassidim, and Lloyd 2009) (known in the literature as HHL algorithm) that proposed a quantum algorithm for matrix inversion, a lot of progress has been made in improving quantum algorithms for these problems. In this section, we briefly recall some of the results, and we conclude by citing the state-of-the art techniques for performing not only matrix inversion and matrix multiplication, but also for applying a certain class of functions to the singular values of a matrix. The HHL algorithm - under suitable assumptions - was able to create a quantum state proportional to the solution to a sparse linear system of equations \(Ax=b\) using only a number of queries to the oracle that was polylogarithmic in the size of the matrix \(A\). The assumption are the following: \(A\) must be symmetric and sparse (and we have quantum query access to \(A\), and quantum sample access to \(|b\rangle\), as we defined more precisely in 3 ). The runtime of the first quantum algorithm for this problem was \(\widetilde{O}\left(s^2\kappa(A)^2/\epsilon \right)\), where \(s\) is the maximum value of non-zero entries per rows.

5.4.1 Singular value estimation

A notable result after HHL was the ability to perform a quantum version of the singular value decomposition. You can think of this result as a generalized phase estimation, i.e. a phase estimation that works on non-unitary matrices. It was first proposed in (Kerenidis and Prakash 2017), and later improved in (Kerenidis and Prakash 2020) and (Chakraborty, Gilyén, and Jeffery 2019). This idea is detailed in the following theorem.

Theorem 5.8 (Singular Value Estimation (Kerenidis and Prakash 2020)) Let \(M \in \mathbb{R}^{n \times d}\) be a matrix with singular value decomposition \(M =\sum_{i} \sigma_i u_i v_i^T\) for which we have quantum access. Let \(\varepsilon > 0\) the precision parameter. There is an algorithm with running time \(\tilde{O}(\mu(M)/\varepsilon)\) that performs the mapping \(\sum_{i} \alpha_i |v_i\rangle \to \sum_{i}\alpha_i |v_i\rangle |\tilde{\sigma}_i\rangle\), where \(| \tilde{\sigma_i} - \sigma_i | \leq \varepsilon\) for all \(i\) with probability at least \(1-1/poly(n)\).

Recall that quantum access to a matrix is defined in theorem ??, and the parameter \(\mu\) is defined in definition 3.14. The relevance of theorem 5.8 for quantum machine learning is the following: if we are able to estimate the singular values of a matrix, then we can perform a conditional rotation controlled by these singular values and hence perform a variety of linear algebraic operations, including matrix inversion, matrix multiplication or projection onto a subspace. Based on this result, quantum linear algebra was done using the theorem stated below.

Theorem 5.9 (Old method for quantum linear algebra) Let \(M := \sum_{i} \sigma_iu_iv_i^T \in \mathbb{R}^{d \times d}\) such that \(\left\lVert M\right\rVert_2 = 1\), and a vector \(x \in \mathbb{R}^d\) for which we have quantum access. There exist quantum algorithms that with probability at least \(1-1/poly(d)\) returns

- a state \(|z\rangle\) such that \(| |z\rangle - |Mx\rangle| \leq \epsilon\) in time \(\tilde{O}(\kappa^2(M)\mu(M)/\epsilon)\)

- a state \(|z\rangle\) such that \(||z\rangle - |M^{-1}x\rangle| \leq \epsilon\) in time \(\tilde{O}(\kappa^2(M)\mu(M)/\epsilon)\)

- a state \(|M_{\leq \theta, \delta}^+M_{\leq \theta, \delta}x\rangle\) in time \(\tilde{O}(\frac{ \mu(M) \left\lVert x\right\rVert}{\delta \theta \left\lVert M^{+}_{\leq \theta, \delta}M_{\leq \theta, \delta}x\right\rVert})\)

One can also get estimates of the norms with multiplicative error \(\eta\) by increasing the running time by a factor \(1/\eta\).

Recall that we denote as \(V_{\geq \tau}\) the matrix \(\sum_{i=0}^\ell \sigma_i u_i v_i^T\) where \(\sigma_\ell\) is the smallest singular value which is greater than \(\tau\). For a matrix \(M\) and a vector \(x\), we define as \(M^{+}_{\leq \theta, \delta}M_{\leq \theta, \delta} x\) the projection of \(x\) onto the space spanned by the singular vectors of \(M\) whose corresponding singular values are smaller than \(\theta\), and some subset of singular vectors whose corresponding singular values are in the interval \([\theta, (1+\delta)\theta]\).

For a symmetric matrix \(M \in \mathbb{R}^{d\times d}\) with spectral norm \(\|M\|=1\) for which we have quantum access, the running time of these algorithms depends on the condition number \(\kappa(M)\) of the matrix, that can be replaced by \(\kappa_\tau(M)\), a condition threshold where we keep only the singular values bigger than \(\tau\), and the parameter \(\mu(M)\), a matrix dependent parameter defined in definition 3.14. The running time also depends logarithmically on the relative error \(\epsilon\) of the final outcome state. Recall that these linear algebra procedures above can also be applied to any rectangular matrix \(V \in \mathbb{R}^{n \times d}\) by considering instead the symmetric matrix

\[ \overline{V} = \left ( \begin{matrix} 0 &V \\ V^{T} &0 \\ \end{matrix} \right ).\]

5.4.2 Linear combination of unitaries

We continue our journey in quantum linear algebra by discussing the state-of-the-art technique beneath quantum linear algebra.

The research of quantum algorithms for machine learning has always used techniques developed in other areas of quantum algorithms. Among the many, we cite quantum algorithms for Hamiltonian simulation and quantum random walks. In fact, using quantum random walks, it is possible to decrease the dependence on the error parameter, from polynomial to \(\text{polylog}(1/\epsilon)\) (Childs and Wiebe 2012). Stemming from the research in Hamiltonian simulation (Berry, Childs, and Kothari 2015), (Low and Chuang 2019), (Berry et al. 2015), (Low and Chuang 2017),(Subramanian, Brierley, and Jozsa 2019), these techniques have been further optimized, pushing them to the limit of almost optimal time and query complexity. Significant progress in the direction of quantum algorithms for linear algebra was the so-called LCU, or linear combination of unitaries (Childs and Wiebe 2012), which again was developed in the context of the Hamiltonian simulation problem.

Lemma 5.3 (Linear combination of unitaries (Childs, Kothari, and Somma 2015)) Let \(M = \sum_{i} \alpha_i U_i\) be a linear combination of unitaries \(U_i\) with \(\alpha_i >0\) for all \(i\). Let \(V\) be any operator that satisfies \(V|0^{\otimes m}\rangle := \frac{1}{\sqrt{\alpha}}\sum_i \sqrt{\alpha_i}|i\rangle\), where \(\alpha := \sum_i \alpha_i\). Then \(W := V^{\dagger}UV\) satisfies \[\begin{equation} W|0^{\otimes m}\rangle|\psi\rangle =\frac{1}{\alpha}|0^{\otimes m}\rangle M|\psi\rangle + |\Psi^\perp\rangle \end{equation}\] for all states \(|\psi\rangle\), where \(U := \sum_i |i\rangle\langle i| \otimes U_i\) and \((|0^{\otimes m}\rangle\langle 0^{\otimes m}| \otimes I)|\Psi^\perp\rangle=0\).

5.4.3 Singular value transformation

The research in quantum linear algebra culminated with the work of (Chakraborty, Gilyén, and Jeffery 2019), (Gilyén et al. 2019) with some improvements in (Chakraborty, Morolia, and Peduri 2022). We now briefly go through the machinery behind these results, as it will be used extensively this work. Before that, we recall the definition of block-encoding from Chapter 3.

Definition 5.2 (Block encoding of a matrix) Let \(A\in \mathbb{C}^{2^s \times 2^s}\). We say that a unitary \(U \in \mathbb{C}^{(s+a)\times(s+a)}\) is a (\(\alpha, a, \epsilon)\) block encoding of \(A\) if: \[\left\lVert A - \alpha (\langle 0|^a \otimes I )U( |0\rangle^a \otimes I) \right\rVert \leq \epsilon\]

We will see that having quantum access to a matrix \(A\in\mathbb{C}^{2^w \times 2^w}\), as described in the setting of theorem 3.8, it is possible to implement a \((\mu(A), w+2, \text{polylog}(\epsilon))\) block-encoding of \(A\) . Given matrix \(U\) which is a \((\alpha, a, \delta)\) block encoding of \(A\), and a matrix \(V\) which is a \((\beta, b, \epsilon)\) block encoding of \(B\), it is simple to obtain a \((\alpha\beta, a+b, \alpha\epsilon+\beta\delta)\) block encoding of \(AB\).

For practical purposes, having a block encoding of a matrix \(A\), allows one to manipulate its spectra using polynomial approximation of analytic functions. In the following theorem, the notation \(P_{\Re}(A)\) means that we apply the polynomial \(P\) to the singular values of the matrix \(A\), i.e. \(P_{\Re}(A) = \sum_i^r P(\sigma_i)u_iv_i^T\).

Theorem 5.10 (Polynomial eigenvalue transformation of arbitrary parity (Gilyén et al. 2019)) Suppose that \(U\) is an \((\alpha,a,\epsilon)\)-block encoding of the Hermitian matrix \(A\). If \(\delta\geq 0\) and \(P_{\Re}\in\mathbb{R}[x]\) is a degree-\(d\) polynomial satisfying that:

- for all \(x\in[-1,1]\), \(| P_{\Re}(x)|\leq \frac{1}{2}\).

Then there is a quantum circuit \(\tilde{U}\), which is an \((1,a+2,4d\sqrt{\epsilon/\alpha}+\delta)\)-encoding of \(P_{\mathcal{R}}(A/\alpha)\), and consists of \(d\) applications of \(U\) and \(U^\dagger\) gates, a single application of controlled-\(U\) and \(O((a+1)d)\) other one- and two-qubit gates. Moreover we can compute a description of such a circuit with a classical computer in time \(O(\text{poly}d,\log(1/\delta))\).

We will discuss in Section 5.4.4 how to use these subroutines for solving the linear system problems. You can find in Appendix @ref(#polyapprox-1overx) the polynomial approximation that was originally used in (Childs, Kothari, and Somma 2015) to get what almost-tight gate complexity for this problem. This result has been improved in (Gribling, Kerenidis, and Szilágyi 2021), leading to a polynomial approximation allowing several orders of magnitude faster algorithms for linear system solving.

Given a \((\alpha, a, \epsilon)\)-block encoding for a matrix \(A\) and a quantum state \(|b\rangle\), we can obtain a good approximation of \(A|b\rangle/\left\lVert Ab\right\rVert\) by first creating the state \(|0^a,b\rangle\) and then applying the block encoding of \(A\) to it. Then, we can amplify the part of the subspace associated with the state \(|0\rangle^{\otimes a}A|b\rangle\). Differently, one might use advanced amplification techniques and reach a similar result. This concept is detailed in the following lemma.

Lemma 5.4 (Applying a block-encoded matrix to a quantum state (Chakraborty, Gilyén, and Jeffery 2019)) Fix any \(\varepsilon \in (0,1/2)\). Let \(A \in \mathbb{C}^{N \times N}\) such that \(\left\lVert A\right\rVert\leq 1\) and \(|b\rangle\) a normalized vector in \(\mathbb{C}^N\), such that \(\left\lVert A|b\rangle\right\rVert \geq \gamma\). Suppose that \(|b\rangle\) can be generated in complexity \(T_b\) and there is a \((\alpha, a, \epsilon)\)-block encoding of \(A\) for some \(\alpha \geq 1\), with \(\epsilon \leq \varepsilon\gamma/2\), that can be implemented in cost \(T_A\). Then there is a quantum algorithm with complexity \[O\left(min(\frac{\alpha(T_A + T_b)}{\gamma}, \frac{\alpha T_A\log(1/\epsilon)+T_B}{\gamma})\right) \] that terminates with success probability at least \(2/3\), and upon success generates the state \(A|b\rangle/\left\lVert A|b\rangle\right\rVert\) to precision \(\varepsilon\).

For sake of completeness, we briefly discuss how to prove the first upper bound. Generating \(|b\rangle\) and applying the block encoding of \(A\) to it, we create a state that is \((\epsilon/\alpha)\)-close to: \[|0\rangle^{\otimes a}(\frac{1}{\alpha}A|b\rangle) + |0^\perp\rangle \] From the hypothesis, we know that \(\left\lVert\frac{1}{\alpha}A|b\rangle \right\rVert \geq \gamma/\alpha\). We can use \(O(\alpha/\gamma)\) calls to amplitude amplification on the initial register being \(|0\rangle^{\otimes a}\), to get \(\frac{\epsilon}{\gamma}\) close to \(|0\rangle^{\otimes a}\frac{A|b\rangle}{\left\lVert A\right\rVert|b\rangle}\). The second upper bound is shown by other techniques based on amplitude amplification of singular values of block encoded matrices (i.e. , ).

Regarding the usage of block-encodings for solving with a quantum computer a linear system of equations (i.e. multiplying a quantum state by the inverse of a matrix, and creating a state \(|x\rangle\) proportional to \(A^{-1}|b\rangle\)) we can proceed in an analogous way. First, we need to create block encoding access to \(A^{-1}\). Using the following lemma, (where they denoted with \(\kappa\) the condition number of \(A\)) we can implement negative powers of Hermitian matrices.

Lemma 5.5 (Implementing negative powers of Hermitian matrices (Chakraborty, Gilyén, and Jeffery 2019) lemma 9) Let \(c \in (0,\infty), \kappa \geq 2\), and let \(A\) be an Hermitian matrix such that \(I/\kappa \leq A \leq I\). Suppose that \(\delta = o(\epsilon/( \kappa^{1+c}(1+c) \log^3\frac{k^{1+c}}{\epsilon}))\) and \(U\) is an \((\alpha,a,\delta)\)-block encoding of \(A\) that can be implemented using \(T_U\) gates. Then, for any \(\epsilon\), we can implement a unitary \(\tilde{U}\) that is a \((2\kappa^c, a, \epsilon)\)-block encoding of \(H^{-c}\) in cost: \[O\left(\alpha \kappa(a+T_U)(1+c)\log^2(\frac{k^{1+c}}{\epsilon})\right)\]

Nevertheless, the algorithm that we can obtain by using the previous lemma has a quadratic dependence on \(\kappa\). To decrease it to an algorithm linear in \(\kappa\) the authors used variable time amplitude amplifications(A. Ambainis 2012). Hence, we can restate the theorem 5.9, with the improved runtimes, as follows.

Theorem 5.11 (Quantum linear algebra (Chakraborty, Gilyén, and Jeffery 2019),(Gilyén et al. 2019)) Let \(M := \sum_{i} \sigma_iu_iv_i^T \in \mathbb{R}^{d \times d}\) such that \(\left\lVert M\right\rVert_2 =1\), and a vector \(x \in \mathbb{R}^d\) for which we have quantum access in time \(T_\chi\). There exist quantum algorithms that with probability at least \(1-1/poly(d)\) return

- a state \(|z\rangle\) such that \(| |z\rangle - |Mx\rangle| \leq \epsilon\) in time \(\tilde{O}(\kappa(M)(\mu(M)+T_\chi)\log(1/\epsilon))\)

- a state \(|z\rangle\) such that \(||z\rangle - |M^{-1}x\rangle| \leq \epsilon\) in time \(\tilde{O}(\kappa(M)(\mu(M)+T_\chi)\log(1/\epsilon))\)

- a state \(|M_{\leq \theta, \delta}^+M_{\leq \theta, \delta}x\rangle\) in time \(\tilde{O}(T_\chi \frac{ \mu(M) \left\lVert x\right\rVert}{\delta \theta \left\lVert M^{+}_{\leq \theta, \delta}M_{\leq \theta, \delta}x\right\rVert})\)

One can also get estimates of the norms with multiplicative error \(\eta\) by increasing the running time by a factor \(1/\eta\).

This algorithm is leveraging Theorem 5.10 and the low-degree polynomial approximation of \(1/x\) in Appendix E.2.

Another important advantage of the new methods is that it provides easy ways to manipulate sums or products of matrices.

Theorem 5.12 (Quantum linear algebra for product of matrices (Chakraborty, Gilyén, and Jeffery 2019),(Gilyén et al. 2019)) Let \(M_1, M_2 \in \mathbb{R}^{d \times d}\) such that \(\left\lVert M_1\right\rVert_2= \left\lVert M_2\right\rVert_2 =1\), \(M=M_1M_2\), and a vector \(x \in \mathbb{R}^d\) for which we have quantum access. There exist quantum algorithms that with probability at least \(1-1/poly(d)\) return

- a state \(|z\rangle\) such that \(| |z\rangle - |Mx\rangle| \leq \epsilon\) in time \(\tilde{O}(\kappa(M)(\mu(M_1)+\mu(M_2))\log(1/\epsilon))\)

- a state \(|z\rangle\) such that \(||z\rangle - |M^{-1}x\rangle| \leq \epsilon\) in time \(\tilde{O}(\kappa(M)(\mu(M_1)+\mu(M_2))\log(1/\epsilon))\)

- a state \(|M_{\leq \theta, \delta}^+M_{\leq \theta, \delta}x\rangle\) in time \(\tilde{O}(\frac{ (\mu(M_1)+\mu(M_2)) \left\lVert x\right\rVert}{\delta \theta \left\lVert M^{+}_{\leq \theta, \delta}M_{\leq \theta, \delta}x\right\rVert})\)

One can also get estimates of the norms with multiplicative error \(\eta\) by increasing the running time by a factor \(1/\eta\).

More generally, applying a matrix \(M\) which is the product of \(k\) matrices, i.e. \(M=M_1\dots M_k\) will result in a runtime of \(\kappa(M)(\sum_i^k \mu(M_i) )\log(1/\epsilon)\) factors in the runtime.

5.4.4 Matrix inversion after HHL

In this section we briefly recap the progress that we had in the last decade for solving the quantum linear system problem (QLSP). First, we stress the fact that the problem solved by this algorithm is fundamentally different than solving a linear system of equation on a classical computer (i.e. when we obtain \(x=A^{-1}b\)), as with a classical computer, once we finish the computation we obtain a classical description of the vector \(x\). Instead, on a quantum computer we obtain a quantum state \(|x\rangle\).

For a few years the techniques developed in (Kerenidis and Prakash 2017) and (Kerenidis and Prakash 2020) were the state of the art

After Ambainis used variable-time amplitude amplification to reduce the dependence on the condition number from quadratic do linear, Childs et al. (Childs, Kothari, and Somma 2015) used the LCU framework, variable-time amplitude amplification, and the polynomial approximation of \(1/x\) to solve the QLSP with a runtime dependece on the condition number of \(O(\kappa(A)\log(\kappa))\), but also with an exponential improvement in the precision, i.e. now the error dependence appear as \(O(\log(1/\epsilon))\) in the runtime. The authors used quantum walks to represent \(x\) as linear combination of polynomials \(\sum_{i=1}^n \alpha_n T_n(x/d)\) where \(T_n\) is the Chebychev polynomial of the first kind, \(d\) is the sparsity of the matrix \(A\), and \(\alpha_i\) the coefficients of the polynomial expansions. For this, they had to give the first efficient polynomial approximation of \(1/x\) (Lemma 17,18,19 of (Childs, Kothari, and Somma 2015) ) that you can find explained in greater deatails in the Appendix E.2. Interestingly, the QLSP has been also studied in the adiabatic setting, first in (Subaşı, Somma, and Orsucci 2019), and later improved in (An and Lin 2022), and with eigenstate filtering (Lin and Tong 2020) to an optimal scaling of \(O(\kappa(A))\) (i.e. without a \(O(\log(\kappa(A)))\) factor in the runtime, which to our knowledge remains unbeated by other gate based quantum algorithms) (Costa et al. 2021).

Last but not least, matrix inversion can be seen as the problem of implementing the singular value transformation of \(x \mapsto 1/x\). For this, one needs to get a polynomial approximation of the function \(1/x\). While this might seem a simple task, there are small complications. First, one usually does not consider the whole interval \([-1, 1]\). In practice, one excludes the subset of the domain where the function has singularities (i.e. for \(1/x\) is around zero). It is preferable to pick a polynomial of a small degree, as the depth of the circuit depends linearly on the degree of the polynomial. In short with both LCU and QSVT we can use polynomial approximations to solve the QLSP problem. With LCU we have an additional multiplicative factor in the depth of the circuit compared to the QSVT framework, and some more ancillary qubits (Gribling, Kerenidis, and Szilágyi 2021).

A review of the progress made in the first 9 years after HHL can be found in (Dervovic et al. 2018) and (Gribling, Kerenidis, and Szilágyi 2021), from which we take this exercise. More recently, (Gribling, Kerenidis, and Szilágyi 2021) shows the latest techniques for the polynomial approximation of \(1/x\) (and other functions), which improves the polynomial approximation of (Childs, Kothari, and Somma 2015).

Exercise 5.3 In (Gribling, Kerenidis, and Szilágyi 2021) they say the following: \[\|Ax -b \| \leq \|x - A^{-1}b\| \leq \kappa\|Ax-b \| \] can you prove it?

5.5 Distances, inner products, norms, and quadratic forms

In this section, we report two lemmas that can be used to estimate the inner products, distances, and quadratic forms between vectors. The lemma 5.6 has been developed in the work (Kerenidis et al. 2019). The lemma 5.8 and the other lemmas for inner product estimation in the query model come from (Hamoudi et al. 2020).

5.5.1 Inner products and quadratic forms with KP-trees

We can rephrase this theorem saying that we have quantum access to the matrices, but for simplicity we keep the original formulation. Also, in the remark following the proof of this lemma, we give the runtime of the same algorithm, but when we compute all the distances in superposition. Thanks to the union bound, we incour only in a logarithmic cost.

Lemma 5.6 (Distance and Inner Products Estimation (Kerenidis et al. 2019)) Assume for a matrix \(V \in \mathbb{R}^{n \times d}\) and a matrix \(C \in \mathbb{R}^{k \times d}\) that the following unitaries \(|i\rangle|0\rangle \mapsto |i\rangle|v_i\rangle\), and \(|j\rangle|0\rangle \mapsto |j\rangle|c_j\rangle\) can be performed in time \(T\) and the norms of the vectors are known. For any \(\Delta > 0\) and \(\epsilon>0\), there exists a quantum algorithm that computes

- \(|i\rangle|j\rangle|0\rangle \mapsto |i\rangle|j\rangle|\overline{d^2(v_i,c_j)}\rangle\) where \(|\overline{d^{2}(v_i,c_j)}-d^{2}(v_i,c_j)| \leqslant \epsilon\) w.p. \(\geq 1-2\Delta\)

- \(|i\rangle|j\rangle|0\rangle \mapsto |i\rangle|j\rangle|\overline{(v_i,c_j)}\rangle\) where \(|\overline{(v_i,c_j)}-(v_i,c_j)| \leqslant \epsilon\) w.p. \(\geq 1-2\Delta\)

in time \(\widetilde{O}\left(\frac{ \left\lVert v_i\right\rVert\left\lVert c_j\right\rVert T \log(1/\Delta)}{ \epsilon}\right)\).

Proof. Let us start by describing a procedure to estimate the square \(\ell_2\) distance between the normalized vectors \(|v_i\rangle\) and \(|c_j\rangle\). We start with the initial state \[ |\phi_{ij}\rangle := |i\rangle |j\rangle \frac{1}{\sqrt{2}}(|0\rangle+ |1\rangle)|0\rangle \]

Then, we query the state preparation oracle controlled on the third register to perform the mappings \(|i\rangle|j\rangle|0\rangle|0\rangle \mapsto |i\rangle|j\rangle|0\rangle|v_i\rangle\) and \(|i\rangle|j\rangle|1\rangle|0\rangle \mapsto |i\rangle|j\rangle|1\rangle|c_j\rangle\). The state after this is given by, \[ \frac{1}{\sqrt{2}}\left( |i\rangle|j\rangle|0\rangle|v_i\rangle + |i\rangle|j\rangle|1\rangle|c_j\rangle\right) \] Finally, we apply an Hadamard gate on the the third register to obtain, \[|i\rangle|j\rangle\left( \frac{1}{2}|0\rangle\left(|v_i\rangle + |c_j\rangle\right) + \frac{1}{2}|1\rangle\left(|v_i\rangle -|c_j\rangle\right)\right)\] The probability of obtaining \(|1\rangle\) when the third register is measured is, \[ p_{ij} = \frac{1}{4}(2 - 2\langle v_i\rangle{c_j}) = \frac{1}{4} d^2(|v_i\rangle, |c_j\rangle) = \frac{1 - \langle v_i | c_j\rangle}{2}\] which is proportional to the square distance between the two normalized vectors.

We can rewrite \(|1\rangle\left(|v_i\rangle - |c_j\rangle\right)\) as \(|y_{ij},1\rangle\) (by swapping the registers), and hence we have the final mapping \[\begin{equation} A: |i\rangle|j\rangle |0\rangle \mapsto |i\rangle|j\rangle(\sqrt{p_{ij}}|y_{ij},1\rangle+\sqrt{1-p_{ij}}|G_{ij},0\rangle) \tag{5.1} \end{equation}\]

where the probability \(p_{ij}\) is proportional to the square distance between the normalized vectors and \(G_{ij}\) is a garbage state. Note that the running time of \(A\) is \(T_A=\tilde{O}(T)\).

Now that we know how to apply the transformation described in Equation (5.1), we can use known techniques to conclude our subroutine to perform the distance estimation within additive error \(\epsilon\) with high probability. The method uses two tools, amplitude estimation, and the median evaluation lemma D.2 from (Wiebe, Kapoor, and Svore 2018), which is a quantum version of the well-known powering-lemma C.1.

First, using amplitude estimation (theorem 5.4 ) with the unitary \(A\) defined in Equation (5.1), we can create a unitary operation that maps \[ \mathcal{U}: |i\rangle|j\rangle |0\rangle \mapsto |i\rangle|j\rangle \left( \sqrt{\alpha} | \overline{p_{ij}}, G, 1\rangle + \sqrt{ (1-\alpha ) }|G', 0\rangle \right) \] where \(G, G'\) are garbage registers, \(|\overline{p_{ij}} - p_{ij} | \leq \epsilon\) and \(\alpha \geq 8/\pi^2\). The unitary \(\mathcal{U}\) requires \(P\) iterations of \(A\) with \(P=O(1/\epsilon)\). Amplitude estimation thus takes time \(T_{\mathcal{U}} = \widetilde{O}(T/\epsilon)\). We can now apply theorem D.2 for the unitary \(\mathcal{U}\) to obtain a quantum state \(|\Psi_{ij}\rangle\) such that,

\[\||\Psi_{ij}\rangle-|0\rangle^{\otimes L}|\overline{p_{ij}}, G\rangle\|_2\le \sqrt{2\Delta}\]

The running time of the procedure is \(O( T_{\mathcal{U}} \ln(1/\Delta)) = \widetilde{O}( \frac{T }{\epsilon}\log (1/\Delta) )\).

Note that we can easily multiply the value \(\overline{p_{ij}}\) by 4 in order to have the estimator of the square distance of the normalized vectors or compute \(1-2\overline{p_{ij}}\) for the normalized inner product. Last, the garbage state does not cause any problem in calculating the minimum in the next step, after which this step is uncomputed. The running time of the procedure is thus \(O( T_{\mathcal{U}} \ln(1/\Delta)) = O( \frac{T }{\epsilon}\log (1/\Delta) )\). The last step is to show how to estimate the square distance or the inner product of the unnormalized vectors. Since we know the norms of the vectors, we can simply multiply the estimator of the normalized inner product with the product of the two norms to get an estimate for the inner product of the unnormalized vectors and a similar calculation works for the distance. Note that the absolute error \(\epsilon\) now becomes \(\epsilon \left\lVert v_i\right\rVert\left\lVert c_j\right\rVert\) and hence if we want to have in the end an absolute error \(\epsilon\) this will incur a factor of \(\left\lVert v_i\right\rVert\left\lVert c_j\right\rVert\) in the running time. This concludes the proof of the lemma.

Remark. Lemma 5.6 can be used to compute \(\sum_{i,j=1}^{n,d} |i\rangle|\overline {\langle v_i,c_j \rangle} \rangle\) where every \(\overline {\langle v_i,c_j \rangle}\) has error \(\epsilon\) with an additional multiplicative cost of \(O(\log(nd))\).

Exercise 5.4 Can you use the Union bound (i.e. Theorem C.1 ) to prove the following remark? The solution can be found in (Bellante and Zanero 2022).

It is relatively simple to extend the previous algorithm to one that computes an estimate of a quadratic form. We will consider the case where we have quantum access to a matrix \(A\) and compute the quadratic forms \(v^TAv\) and \(v^TA^{-1}v\). The extension to the case when we have two different vectors, i.e. \(v^TAu\) and \(v^TA^{-1}u\) is trivial.

Lemma 5.7 (Estimation of quadratic forms) Assume to have quantum access to a symmetric positive definite matrix \(A \in \mathbb{R}^{n \times n}\) such that \(\left\lVert A\right\rVert\leq 1\), and to a matrix \(V \in \mathbb{R}^{n \times d}\). For \(\epsilon > 0\), there is a quantum algorithm that performs the mapping \(|i\rangle|0\rangle \mapsto |i\rangle|\overline{s_i}\rangle\), for \(|s_i - \overline{s_i}| \leq \epsilon\), where \(s_i\) is either:

- \((|v_i\rangle,A|v_i\rangle)\) in time \(O(\frac{\mu(A)}{\epsilon})\)

- \((|v_i\rangle,A^{-1}|v_i\rangle)\) in time \(O(\frac{\mu(A)\kappa(A)}{\epsilon})\)

The algorithm can return an estimate of \(\overline{(v_i, Av_i)}\) such that \(\overline{(v_i, Av_i)} - (v_i, Av_i) \leq \epsilon\) using quantum access to the norm of the rows of \(V\) by increasing the runtime by a factor of \(\left\lVert v_i\right\rVert^2\).

Proof. We analyze first the case where we want to compute the quadratic form with \(A\), and after the case for \(A^{-1}\). Recall that the matrix \(A\) can be decomposed in an orthonormal basis \(|u_i\rangle\). We can use theorem 5.11 to perform the following mapping:

\[\begin{align} |i\rangle|v_i\rangle|0\rangle = |i\rangle\frac{1}{N_i}\sum_j^n \alpha_{ij}|u_j\rangle|0\rangle \mapsto |i\rangle\frac{1}{N_i} \sum_i^n \Big(\lambda_i\alpha_{ij}|u_i,0\rangle+ \sqrt{1-\gamma^2}|G,1\rangle \Big) =\\ |i\rangle \Big(\left\lVert Av_i\right\rVert|Av_i,0\rangle+\sqrt{1-\gamma^2}|G,1\rangle \Big) =|i\rangle|\psi_i\rangle, \end{align}\]

where \(N_i = \sqrt{\sum_j^n \alpha_{ij}^2}\). We define \(|\phi_i\rangle = |v_i,0\rangle\). Using controlled operations, we can then create the state:

\[\begin{equation}\label{eq:quadatic A} \frac{1}{2}|i\rangle\left(|0\rangle(|\phi_i\rangle+|\psi_i\rangle) + |1\rangle(|\phi_i\rangle-|\psi_i\rangle) \right) \end{equation}\]

It is simple to check that, for a given register \(|i\rangle\), the probability of measuring \(0\) is: \[p_i(0) = \frac{1+\left\lVert Av_i\right\rVert\langle Av_i|v_i\rangle}{2}\]

We analyze the case where we want to compute the quadratic form for \(A^{-1}\). For a \(C = O(1/\kappa(A))\), we create instead the state: \[\begin{align}\label{eq:quadatic A minus 1} |i\rangle\frac{1}{\sqrt{\sum_i^n \alpha_i^2}} \sum_i^n \Big(\frac{C}{\lambda_i}\alpha_i|v_i,0\rangle + \sqrt{1-\gamma^2}|G,1\rangle \Big) = |i\rangle|\psi_i\rangle \end{align}\]

\[\begin{equation} U_2 |i\rangle|0\rangle \mapsto \frac{1}{2}|i\rangle \left(\sqrt{\alpha}|p_i(0),y_i,0\rangle + \sqrt{1-\alpha}|G_i,1\rangle \right) \end{equation}\]

and estimate \(p_i(0)\) such that \(|p_i(0) - \overline{p_i(0)}| < \epsilon\) for the case of \(v_i^TAv_i\) and we choose a precision \(\epsilon/C\) for the case of \(v_i^TA^{-1}v_i\) to get the same accuracy. Amplitude estimation theorem, i.e. theorem @ref(thm:ampest_orig) fails with probability \(\leq \frac{8}{\pi^2}\). The runtime of this procedure is given by combining the runtime of creating the state \(|\psi_i\rangle\), amplitude estimation, and the median lemma. Since the error in the matrix multiplication step is negligible, and assuming quantum access to the vectors is polylogarithmic, the final runtime is \(O(\log(1/\delta)\mu(A)\log(1/\epsilon_2) / \epsilon)\), with an additional factor \(\kappa(A)\) for the case of the quadratic form of \(A^{-1}\).

Note that if we want to estimate a quadratic form of two unnormalized vectors, we can just multiply this result by their norms. Note also that the absolute error \(\epsilon\) now becomes relative w.r.t the norms, i.e. \(\epsilon \left\lVert v_i\right\rVert^2\). If we want to obtain an absolute error \(\epsilon'\), as in the case with normalized unit vectors, we have to run amplitude estimation with precision \(\epsilon'=O(\epsilon/\left\lVert v_i\right\rVert^2)\). To conclude, this subroutine succeeds with probability \(1-\gamma\) and requires time \(O(\frac{\mu(A) \log (1/\gamma) \log (1/\epsilon_?)}{\epsilon_1})\), with an additional factor of \(\kappa(A)\) if we were to consider the quadratic form for \(A^{-1}\), and an additional factor of \(\left\lVert v_i\right\rVert^2\) if we were to consider the non-normalized vectors \(v_i\). This concludes the proof of the lemma.

Note that this algorithm can be extended by using another index register to query for other vectors from another matrix \(W\), for which we have quantum access. This extends the capabilities to estimating inner products in the form \(|i\rangle|j\rangle|w_i^TA v_i\rangle\).

5.5.2 Inner product and l1-norm estimation with query access

Lemma 5.8 (Quantum state preparation and norm estimation) Let \(\eta >0\). Given a non-zero vector \(u \in [0,1]^N\), with \(\max_j u_j = 1\). Given quantum access to \(u\) via the operation \(|j\rangle |\bar 0\rangle \to |j\rangle | u_j\rangle\) on \(O(\log N + \log 1/ \eta)\) qubits, where \(u_j\) is encoded to additive accuracy \(\eta\). Then:

- There exists a unitary operator that prepares the state \(\frac{1}{\sqrt{N}} \sum_{j=1}^N |j\rangle \left( \sqrt{ u_j } |0\rangle + \sqrt{1- u_j} |1\rangle \right)\) with two queries and number of gates \(O(\log N + \log 1/\eta)\). Denote this unitary by \(U_\chi\).

- Let \(\epsilon >0\) such that \(\eta \leq \epsilon/ (2N)\) and \(\delta \in (0,1)\). There exists a quantum algorithm that provides an estimate \(\Gamma_u\) of the \(\ell_1\)-norm \(\Vert u \Vert_1\) such that \(\left \vert \Vert u \Vert_1 - \Gamma_u\right \vert \leq \epsilon \Vert u \Vert_1\), with probability at least \(1-\delta\). The algorithm requires \(O(\frac{\sqrt{ N}}{\epsilon} \log(1/\delta))\) queries and \(\widetilde{O}(\frac{\sqrt{ N }}{\epsilon} \log\left (1/\delta\right))\) gates.

- Let \(\xi \in (0,1]\) such that \(\eta \leq \xi /4N\) and \(\delta \in(0,1)\). An approximation \(| \tilde p\rangle = \sum_{j=1}^N \sqrt{ \tilde p_j }|j\rangle\) to the state \(|u\rangle := \sum_{j=1}^N \sqrt{ \frac{u_j}{\Vert u \Vert_1}}|j\rangle\) can be prepared with probability \(1-\delta\), using \(O(\sqrt{N} \log (1/\delta))\) calls to the unitary of (i) and \(\widetilde{O}(\sqrt{N} \log (1/\xi)\log \left (1/\delta \right))\) gates. The approximation in \(\ell_1\)-norm of the probabilities is \(\left \Vert \tilde p - \frac{u}{\Vert u\Vert_1} \right\Vert_1 \leq \xi\).

Proof. For the first point, prepare a uniform superposition of all \(|j\rangle\) with \(O(\log N)\) Hadamard gates. With the quantum query access, perform \[\frac{1}{\sqrt{N}} \sum_{j=1}^N |j\rangle |\bar 0\rangle \to \frac{1}{\sqrt{N}} \sum_{j=1}^N |j\rangle | u_j\rangle | 0\rangle\] \[\to \frac{1}{\sqrt{N}} \sum_{j=1}^N |j\rangle | u_j\rangle \left( \sqrt{ u_j} |0\rangle + \sqrt{1-u_j } |1\rangle \right).\]

The steps consist of an oracle query and a controlled rotation. The rotation is well-defined as \(u_j \leq 1\) and costs \(O( \log 1/\eta)\) gates. Then uncompute the data register \(|u_j\rangle\) with another oracle query.

For part 2, define a unitary \(\mathcal U = U_\chi \left(\mathbb{1} - 2 |\bar 0\rangle\langle\bar 0|\right) \left(U_\chi\right)^\dagger\), with \(U_\chi\) from part 1, and here \(\mathbb{1}\) is the identiy matrix. Define another unitary by \(\mathcal V = \mathbb {1}-2 \mathbb{1} \otimes |0\rangle \langle 0|\). Using \(K\) applications of \(\mathcal U\) and \(\mathcal V\), amplitude estimation 5.4 allows to provide an estimate \(\tilde a\) of the quantity \(a = \frac{\Vert u \Vert_1}{N}\) to accuracy \(\vert \tilde{a} - a \vert \leq 2 \pi \frac{\sqrt{a(1-a)} }{K}+ \frac{\pi^2}{K^2}\). Following the idea in (Apeldoorn et al. 2020) (of dividing the elements that we are summing using amplitude estimation by their maximum, that we can find using the finding the minimum subroutine), take \(K> \frac{6 \pi }{\epsilon} \sqrt{ N}\), which obtains \[\begin{align} \left| \tilde a - a \right| &\leq& \frac{\pi}{K}\left( 2\sqrt{a}+ \frac{\pi}{K} \right) < \frac{\epsilon}{6 } \sqrt{ \frac{1}{N } } \left( 2\sqrt{a}+ \frac{\epsilon }{6}\sqrt{ \frac{1}{N } } \right) \nonumber \\ &\leq& \frac{\epsilon}{6 } \sqrt{ \frac{1}{N} } \left( 3 \sqrt{a} \right) = \frac{\epsilon \sqrt{\Vert u \Vert_1} }{2 N}. \end{align}\] Since \(\Vert u \Vert_1 \geq 1\) by assumption, we have \(\left| \tilde{a} - a \right| \leq \frac{\epsilon \Vert u \Vert_1 }{2 N}\).

Also, there is an inaccuracy arising from the additive error \(\eta\) of each \(u_j\). As it was assumed that \(\eta\leq \epsilon / (2N)\), the overall multiplicative error \(\epsilon\) is obtained for the estimation. For performing a single run of amplitude estimation with \(K\) steps, we require \(O(K) =O(\frac{\sqrt{ N }}{\epsilon} )\) queries to the oracles and \(O\left(\frac{\sqrt{ N }}{\epsilon} \left(\log N + \log (N / \epsilon) \right) \right)\) gates.

For part 3, rewrite the state from part 1 as \[\begin{align} \sqrt{ \frac{{\Vert u \Vert_1} }{N}} \sum_{j=1}^N& \sqrt{ \frac{u_j }{{\Vert u \Vert_1}}} |j\rangle |0\rangle + \sqrt{1-\frac{{\Vert u \Vert_1}}{N }} \sum_{j=1}^N \sqrt{\frac{1- u_j }{N -{\Vert u \Vert_1}} } |j\rangle |1\rangle.\nonumber \end{align}\] Now amplify the \(|0\rangle\) part using Amplitude Amplification (Brassard et al. 2002) via the exponential search technique without knowledge of the normalization, to prepare \(\sum_{j=1}^N |j\rangle \sqrt{ \frac{u_j}{\Vert u \Vert_1}}\) with success probability \(1-\delta\). The amplification requires \(O(\sqrt{ \frac{N }{ \Vert u \Vert_1}}\log (1/\delta))= O(\sqrt N \log (1/\delta))\) calls to the unitary of part 1, as \({\Vert u \Vert_1} \geq 1\). The gate complexity derives from the gate complexity of part 1.

Denote the \(\eta\)-additive approximation to \(u_j\) by \(\tilde u_j\), and evaluate the \(\ell_1\)-distance of the probabilities. First, \(\left \vert \Vert u\Vert_1 - \Vert \tilde u\Vert_1\right \vert \leq N \eta\). One obtains \(\left \Vert \tilde p - \frac{u}{\Vert u\Vert_1} \right \Vert_1 =\left \Vert \frac{\tilde u}{{\Vert \tilde u\Vert_1}} - \frac{u}{\Vert u\Vert_1} \right \Vert_1 \leq \sum_j \left \vert \frac{\tilde u_j}{{\Vert \tilde u\Vert_1}} - \frac{u_j}{{\Vert \tilde u\Vert_1}} \right \vert + \sum_j \left \vert \frac{ u_j}{{\Vert \tilde u\Vert_1}} - \frac{u_j}{\Vert u\Vert_1}\right \vert \leq \frac{N \eta} {{\Vert \tilde u\Vert_1}} + \frac{ N \eta } {{\Vert \tilde u\Vert_1}}\).

We also obtain \[\frac{1}{ {\Vert \tilde u\Vert_1} } \leq \frac{1}{ \Vert u\Vert_1 -N\eta } \leq \frac{2}{ \Vert u\Vert_1}\] for \(\eta \leq \Vert u\Vert_1 / 2 N.\)$ Since \(\eta \leq \Vert u\Vert_1 \xi/(4N)\), the distance is \(\left \Vert \tilde p - \frac{u}{\Vert u\Vert_1} \right \Vert_1 \leq \xi\) as desired.

Lemma 5.9 (Quantum inner product estimation with relative accuracy) Let \(\epsilon,\delta \in(0,1)\). Given quantum access to two vectors \(u,v \in [0,1]^N\), where \(u_j\) and \(v_j\) are encoded to additive accuracy \(\eta= O{1/N}\). Then, an estimate \(I\) for the inner product can be provided such that \(\vert I - u\cdot v /\Vert u\Vert_1 \vert \leq \epsilon\ u\cdot v /\Vert u\Vert_1\) with success probability \(1-\delta\). This estimate is obtained with \(O\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right )\right)\) queries and \(\widetilde{O}\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\) quantum gates.

Proof. Via lemma 5.2, determine \(u_{\max}\) with success probability \(1-\delta\) with \(O\left(\sqrt N \log \frac{1}{\delta}\right)\) queries and \(\widetilde{O}\left(\sqrt N \log \left( \frac{1}{\delta}\right )\right)\) quantum gates.

Apply lemma 5.8 with the vector \(\frac{u}{ u_{\max}}\) to obtain an estimate \(\Gamma_u\) of the norm \(\left \Vert \frac{ u}{ u_{\max}} \right \Vert_1\) to relative accuracy \(\epsilon_u= \epsilon/2\) with success probability \(1-\delta\).

This estimation takes \(O(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) )\) queries and \(\widetilde{O}(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) )\) quantum gates.

Define the vector \(z\) with \(z_j = u_j v_j\). Via lemma 5.2, determine \(z_{\max}\) with success probability \(1-\delta\) with \(O\left(\sqrt N \log \frac{1}{\delta}\right)\) queries and \(\widetilde{O}\left(\sqrt N \log \left( \frac{1}{\delta}\right )\right)\) quantum gates. If \(z_{\max} = 0\) up to numerical accuracy, the estimate is \(I = 0\) and we are done. Otherwise, apply lemma 5.8 with the vector \(\frac{ z}{ z_{\max}}\) to obtain an estimate \(\Gamma_z\) of the norm \(\left \Vert \frac{ z}{ z_{\max}} \right \Vert_1\) to relative accuracy \(\epsilon_z = \epsilon/2\) with success probability \(1-\delta\). This estimation takes \(O\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\) queries and \(\widetilde{O}\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right) \right)\) quantum gates.

With lemma D.1, which gives a nice bound for the ratio between two relative errors, we have \[\begin{align} \left \vert \frac{\Gamma_z}{\Gamma_u} - \frac{u_{\max}}{z_{\max}}\frac{u\cdot v}{\Vert u\Vert_1} \right \vert &\leq& \frac{u_{\max}}{z_{\max}} \frac{u\cdot v }{\Vert u\Vert_1 } \frac{\epsilon_z + \epsilon_u}{ (1-\epsilon_u)} \\ &\leq& 2\epsilon \frac{u_{\max}}{z_{\max}} \frac{u\cdot v }{\Vert u\Vert_1 }, \end{align}\] since \(\epsilon_u < 1/2\). Set \[\begin{align} I= \frac{z_{\max}}{u_{\max}} \frac{\Gamma_z}{\Gamma_u}, \end{align}\] and we have \(\vert I - u\cdot v /\Vert u\Vert_1 \vert \leq 2\epsilon\ u\cdot v /\Vert u\Vert_1\). The total success probability of the four probabilistic steps is at least \(1-4\delta\) via a union bound (theorem C.1). Choosing \(\epsilon \to \epsilon/2\) and \(\delta \to \delta/4\) leads to the result.

Lemma 5.10 (Quantum inner product estimation with additive accuracy) Let \(\epsilon,\delta \in(0,1)\). Given quantum access to a non-zero vector \(u \in [0,1]^N\) and another vector \(v \in [-1,1]^N\), where \(u_j\) and \(v_j\) are encoded to additive accuracy \(\eta= O{1/N}\). Then, an estimate \(I\) for the inner product can be provided such that \(\vert I - u\cdot v / \Vert u\Vert_1 \vert \leq \epsilon\) with success probability \(1-\delta\). This estimate is obtained with \(O\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\) queries and \(\widetilde{O}\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\) quantum gates.

Note that as a byproduct, the value \(u_{\max}\) and an estimate of \(\Vert u /u_{\max} \Vert_1\) with relative accuracy \(\epsilon\) can be provided with probability at least \(1-\delta\).

Proof. Via lemma 5.2, determine \(\Vert u\Vert_{\max}\) with success probability \(1-\delta\) with \(O\left(\sqrt N \log \frac{1}{\delta}\right)\) queries and \(\widetilde{O}\left(\sqrt N \log \left( \frac{1}{ \eta}\right) \log \left( \frac{1}{\delta}\right )\right)\) quantum gates. Apply lemma 5.8 with the vector \(\frac{u}{ u_{\max}}\) to obtain an estimate \(\Gamma_u\) of the norm \(\left \Vert \frac{ u}{ u_{\max}} \right \Vert_1\) to relative accuracy \(\epsilon_u= \epsilon/2\) with success probability \(1-\delta\).

This estimation takes \(O\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\) queries and \(\widetilde{O}\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\) quantum gates.

Similarily, consider the vector \(z\) with elements \(z_j := u_j \left (v_j+3\right) \in [0,4]\). Determine \(\Vert z\Vert_{\max}\) with success probability \(1-\delta\) with \(O\left(\sqrt N \log \frac{1}{\delta}\right)\) queries and \(\widetilde{O}\left(\sqrt N \log \left( \frac{1}{\delta}\right )\right)\) quantum gates. Apply lemma 5.8 with the vector \(z/ z_{\max}\) to obtain an estimate \(\Gamma_z\) of the norm \(\Vert z/ z_{\max} \Vert_1\) to relative accuracy \(\epsilon_z = \epsilon/2\) with success probability \(1-\delta\).

This estimation takes \(O\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\) queries and \(\widetilde{O}\left(\frac{ \sqrt{N} }{\epsilon} \log \left (\frac{1}{\delta} \right ) \right)\).

It takes some steps to see that the exact quantities are related via \[\begin{align} \frac{u\cdot v}{\Vert u\Vert_1} = \frac{z_{\max} }{u_{\max} } \frac{ \Vert \frac{z}{ z_{\max}} \Vert_1 } { \Vert \frac{ u}{ u_{\max}} \Vert_1} - 3. \end{align}\] Considering the estimator \(I = \frac{z_{\max} }{u_{\max} } \frac{ \Gamma_z } { \Gamma_u} - 3\), from lemma D.1, we have \[\begin{align} \left \vert I - \frac{u\cdot v}{ \Vert u\Vert_1 } \right \vert &=& \frac{z_{\max} }{u_{\max} } \left \vert \frac{ \Gamma_z } { \Gamma_u} - \frac{ \Vert \frac{z}{ z_{\max}} \Vert_1 } { \Vert \frac{ u}{ u_{\max}} \Vert_1} \right \vert \\ &\leq& \frac{ \epsilon_u + \epsilon_{z}}{1- \epsilon_u} \frac{ \Vert z \Vert_1 } { \Vert u\Vert_1} \leq 8 \epsilon. \nonumber \end{align}\]

In the last steps we have used the observation that \[\begin{align} \frac{ \Vert z \Vert_1 } { \Vert u\Vert_1} \equiv \frac{\sum_j u_j (v_j+3)}{\sum_j u_j } \leq\frac{4 \sum_j u_j }{\sum_j u_j } = 4, \end{align}\] and \(\epsilon_u < 1/2\). All steps together take \(O\left(\frac{\sqrt N}{\epsilon} \log \frac{1}{\delta}\right)\) queries and \(\widetilde{O}\left(\frac{\sqrt N}{\epsilon} \log \left( \frac{1}{\delta}\right )\right)\) gates. The total success probability of all the probabilistic steps is at least \(1-4\delta\) via a union bound. Choosing \(\epsilon \to \epsilon/8\) and \(\delta \to \delta/4\) leads to the result.

5.6 Hamiltonian simulation

These notes based on Childs’ Lecture notes, i.e. (Andrew 2017), Section 5

5.6.1 Introduction to Hamiltonians

The only way possible to start a chapter on Hamiltonian simulation would be to start from the work of Feynman, who had the first intuition on the power of quantum mechanics for simulating physics with computers. We know that the Hamiltonian dynamics of a closed quantum system, weather its evolution changes with time or not is given by the Schrödinger equation:

\[\frac{d}{dt}|\psi(t)\rangle = H(t)|\psi(t)\rangle\]

Given the initial conditions of the system (i.e. \(|\psi(0)\rangle\) ) is it possible to know the state of the system at time \(t: |\psi(t)\rangle = e^{-i (H_1t/m)}|\psi(0)\rangle\).

As you can imagine, classical computers are supposed to struggle to simulate the process that builds \(|\psi(t)\rangle\), since this equation describes the dynamics of any quantum system, and we don’t think classical computers can simulate that efficiently for any general Hamiltonian \(H\). But we understood that quantum computers can help to simulate the dynamics of another quantum system.

Why we might want to do that?

An example would be the following. Imagine you are a quantum machine learning scientist, and you have just found a new mapping between an optimization problem and an Hamiltonian dynamics, and you want to use quantum computer to perform the optimization (Otterbach et al. 2017). You expect a quantum computers to run the Hamiltonian simulation for you, and then sample useful information from the resulting quantum sate. This result might be fed again into your classical algorithm to perform ML related task, in a virtuous cycle of hybrid quantum-classical computation, which we will discuss more in another chapter.

Another example, perhaps more akin to the original scope of Hamiltonian simulation, is related to quantum chemistry. Imagine that are a chemist, and you have developed a hypothesis for the Hamiltonian dynamics of a chemical compound. Now you want to run some experiments to see if the formula behaves according to the experiments (and you cannot simulate numerically the experiment). Or maybe you are testing the properties of complex compounds you don’t want to synthesize.

We can formulate the problem of Hamiltonian simulation in this way:

Definition 5.3 (Hamiltonian simulation problem) Given a state \(|\psi(0)\rangle\) and an Hamiltonian \(H\), obtain a state \(\overline{|\psi(t)\rangle}\) such that \(\|\overline{|\psi(t)\rangle}-e^{-iHt}|\psi(0)\rangle\| \leq \epsilon\) in some norm.

(Note that also this problem can be reformulated in the context of density matrices, where usually the trace norm is used as a distance between quantum states).

This leads us to the definition of efficiently simulable Hamiltonian:

Definition 5.4 (Hamiltonian simulation) Given a state \(|\psi(0)\rangle\) and an Hamiltonian \(H\) acting on \(n\) qubits, we say \(H\) can be efficiently simulated if, \(\forall t \geq 0, \forall \epsilon \geq 0\) there is a quantum circuit \(U\) such that \(\| U - e^{-iHt}\| < \epsilon\) using a number of gates that is polynomial in \(n,t, 1/\epsilon\).