Chapter 9 Dimensionality reduction

We devote this chapter to the study of quantum algorithms for dimensionality reduction. We first delve into unsupervised methods, and then look at the supervised case. In both case we discuss two main subroutines. In one case we can use quantum computers to fit a machine learning model. This means that we can obtain, from a quantum computer, a classical description of the machine learning model (i.e. a set of vectors). In the second case we can imagine using a quantum computer to perform the mapping from an original feature space to the dimensionality-reduced feature space.

9.1 Unsupervised algorithms

In this section we describe three algorithms: the QPCA, QCA and QLSA. These algorithms are mainly from (Bellante and Zanero 2022), which solves in a single framework many of these eigenvalue problems that appears in fitting some simple machine learning models.

9.1.1 Quantum PCA

Principal component analysis is a widely-used multivariate statistical method for continuous variables that finds many applications in machine learning, ranging from outlier detection to dimensionality reduction and data visualization. Consider a matrix \(A \in \mathbb{R}^{n \times m}\) that stores information about \(n\) data points using \(m\) coordinates (e.g. think of \(n\) images described through \(m\) pixels each), its principal components are the set of orthogonal vectors along which the variance of the data points is maximized. The goal of PCA is to compute the principal components, with the amount of variance they capture, and rotate the data-points to make the axis coincide with the principal components. During the process it is possible to perform dimensionality reduction and reduce the number of variables taken into account, i.e. reducing \(A \in \mathbb{R}^{n \times m}\) to \(A \in \mathbb{R}^{n \times k}\) where \(k\leq m\), and express the original data in terms of fewer latent variables that account for the majority of the variance of the original data. If it is possible to find a few latent variables that retain a large amount of variance of the original variables, it is possible to use PCA to visualize even high dimensional datasets.

9.1.1.0.1 Connection to Singular Value Decomposition

The model of PCA is closely related to the singular value decomposition of the data matrix \(A\), shifted to row mean 0. The principal components coincide with the right singular vectors \(v_i\). The factor scores \(\lambda_i = \sigma_i^2\) represent the amount of variance along each of them and the factor score ratios \(\lambda^{(i)}=\frac{\lambda_i}{\sum_j^r\lambda_j}\) express the percentage of the variance retained. For datasets with \(0\) mean, the transformation consists in a rotation along the principal components: \(Y = AV = U\Sigma V^TV = U\Sigma \in \mathbb{R}^{n \times m}\). Therefore, the data points in the new subspace can be computed using the left singular vectors and the singular values of \(A\). When performing dimensionality reduction it suffice to use only the top \(k\) singular values and vectors \(Y^{(k)} = AV^{(k)} = U\Sigma V^TV^{(k)} = U^{(k)}\Sigma^{(k)} \in \mathbb{R}^{n \times m}\).

9.1.1.0.2 Quantum algorithms for PCA

Using the procedures from section 7.2 it is possible to extract the model for principal component analysis. Theorems 7.3, 7.4, ?? allow to retrieve information on the factor scores and on the factor score ratios, while Theorem 7.5 allows extracting the principal components. The run-time of the model extraction is the sum of the run-times of the theorems: \(\widetilde{O}\left(\left( \frac{1}{\gamma^2} + \frac{km}{\theta\delta^2}\right)\frac{\mu(A)}{\epsilon}\right)\). The model comes with the following guarantees: \(\|\sigma_i - \overline{\sigma}_i\| \leq \frac{\epsilon}{2}\); \(\|\lambda_i - \overline{\lambda}_i\| \leq \epsilon\); \(\|\lambda^{(i)} - \overline{\lambda}^{(i)}\| \leq \gamma\); \(\|v_i - \overline{v}_i\| \leq \delta\) for \(i \in \{0,k-1\}\). This run-time is generally smaller than the number of elements of the input data matrix, providing polynomial speed-ups on the best classical routines for non-sparse matrices. In writing the time complexity of the routines, we omitted the term \(\frac{1}{\sqrt{p}}\) because usually the amount of variance to retain \(p\) is chosen to be a number greater than 0.5 (generally in the order of 0.8/0.9).

When performing dimensionality reduction, the goal is to obtain the matrix \(Y=U\Sigma \in \mathbb{R}^{n\times k}\), where \(U \in \mathbb{R}^{n \times k}\) and \(\Sigma \in \mathbb{R}^{k \times k}\) are composed respectively by the top \(k\) left singular vectors and singular values. When this is the case, the user might want to extract the top \(k\) \(u_i\) and \(\sigma_i\) rather than the principal components, to avoid matrix multiplication. For this reason, we provide a lemma to bound the error on the retrieved mode. For sake of completeness, the error bound is also stated for \(V\Sigma\).

Lemma 9.1 (Accuracy of qPCA's representation (classical)) Let \(A \in \mathbb{R}^{n \times m}\) be a matrix with \(\sigma_{max} \leq 1\). Given some approximate procedures to retrieve estimates \(\overline{\sigma}_i\) of the singular values \(\sigma_i\) such that \(\|\sigma_i - \overline{\sigma}_i\| \leq \epsilon\) and unit estimates \(\overline{u}_i\) of the left singular vectors \(u_i\) such that \(\|\overline{u}_i - u_i\|_2 \leq \delta\), the error on \(U\Sigma\) can be bounded as \(\|U\Sigma - \overline{U}\overline{\Sigma}\|_F \leq \sqrt{k}(\epsilon+\delta)\). Similarly, \(\| V\Sigma - \overline{V}\overline{\Sigma}\|_F \leq \sqrt{k}(\epsilon+\delta)\).

Proof. The first step of the proof consists in bounding the error on the columns of the matrices: \(||\overline{\sigma_i}\overline{u}_i - \sigma_i u_i||\).

\[\|\overline{\sigma}_i \overline{u}_i - \sigma_i u_i\| \leq \|(\sigma_i \pm \epsilon)\overline{u}_i - \sigma_i u_i\| = \|\sigma_i (\overline{u}_i - u_i) \pm \epsilon\overline{u}_i \|\] Because of the triangular inequality, \(\|\sigma_i (\overline{u}_i - u_i) \pm \epsilon\overline{u}_i \| \leq \sigma_i\|\overline{u}_i - u_i\| + \epsilon\|\overline{u}_i \|\). Also by hypothesis, \(\|(\overline{u}_i - u_i)\| \leq \delta\) and \(||\overline{u}_i|| = 1\) . Thus, \(\sigma_i\| \overline{u}_i - u_i\| + \epsilon\|\overline{u}_i \| \leq \sigma_i \delta + \epsilon\). From the error bound on the columns and the fact that \(f(x) = \sqrt{x}\) is an increasing monotone function, it is possible to prove the error bound on the matrices: \[\|\overline{U}\overline{\Sigma} - U\Sigma\|_F = \sqrt{\sum_i^n\sum_j^k \| \overline{\sigma}_j\overline{u}_{ij} - \sigma_j u_{ij} \|^2} = \sqrt{\sum_j^k \left( \| \overline{\sigma}_j\overline{u}_j - \sigma_j u_j \| \right)^2} \] \[\leq \sqrt{\sum_j^k \left( \epsilon + \delta\sigma_j \right)^2} \leq \sqrt{k \left( \epsilon + \delta\sigma_{max} \right)^2} \leq \sqrt{k}(\epsilon + \delta\|A\|)\]

Finally, since \(\sigma_{max} \leq 1\), we get that \(\|\overline{U}\overline{\Sigma} - U\Sigma\|_F \leq \sqrt{k}(\epsilon + \delta)\).

The fact that the matrix has been normalized to have a spectral norm smaller than one is usually not relevant for the final applications of PCA. However, if one desires to represent transformation of the not-normalized matrix \(A\), the error bounds become the ones of the lemma below.

Lemma 9.2 (Non-normalized accuracy of qPCA's representation (classical)) The estimated representations of the lemma above, for the not-normalized matrix \(A\), are \(\|A\|\overline{U}\overline{\Sigma}\) and \(\|A\|\overline{V}\overline{\Sigma}\). The error bounds become \(\left|\left|||A||U\Sigma - ||A||\overline{U}\overline{\Sigma}\right|\right|_F \leq \sqrt{k}||A||(\epsilon+\delta)\) and \(\left|\left|||A||V\Sigma - ||A||\overline{V}\overline{\Sigma}\right|\right|_F \leq \sqrt{k}||A||(\epsilon+\delta)\)

Proof. Firstly, it is easy to see that to get the desired data representations it suffices to multiply the output matrix by \(\|A\|\). Indeed, in our quantum memory, the singular values of the non-normalized matrix are scaled by a factor \(\frac{1}{\|A\|}\), while the singular vectors remain the same. To prove the bounds, we can just multiply both sides of the inequalities from Lemma 9.1 by \(||A||\), which is a positive quantity: \[\left\lVert\|A\|\overline{U}\overline{\Sigma} - ||A||U\Sigma\right\rVert_F \leq \sqrt{k}\|A\|(\epsilon + \delta), \left\lVert\|A\|\overline{V}\overline{\Sigma} - \|A\|V\Sigma\right\rVert_F \leq \sqrt{k}\|A\|(\epsilon + \delta).\]

Based on Lemma 5.4, we also provide algorithms to produce quantum states proportional to the data representation in the new feature space. After \(V^{(k)} \in \mathbb{R}^{m\times k}\) has been extracted, these routines create the new data points in almost constant time and are therefore useful when these states are used in a quantum machine learning pipeline.

Corollary 9.1 (qPCA: vector dimensionality reduction) Let \(\xi\) be a precision parameter. Let there be efficient quantum access to the top \(k\) right singular vectors \(\overline{V}^{(k)} \in \mathbb{R}^{m \times k}\) of a matrix \(A = U \Sigma V^T \in \mathbb{R}^{n \times m}\), such that \(\|V^{(k)} - \overline{V}^{(k)}\| \leq \frac{\xi}{\sqrt{2}}\). Given efficient quantum access to a row \(a_i\) of \(A\), the quantum state \(|\overline{y}_{i}\rangle = \frac{1}{\|\overline{y}_i\|}\sum_i^k \overline{y}_k |i\rangle\), proportional to its projection onto the PCA space, can be created in time \(\widetilde{O}\left(\frac{\|a_i\|}{\|\overline{y}_i\|}\right)\) with probability at least \(1-1/\text{poly}(m)\) and precision \(\||y_i\rangle - |\overline{y}_i\rangle\| \leq \frac{\|a_i\|}{\|\overline{y}_i\|}\xi\). An estimate of \(\|\overline{y}_i\|\), to relative error \(\eta\), can be computed in \(\widetilde{O}(1/\eta)\).

Proof. In the proof we use \(V\) to denote the matrix \(V^{(k)} \in \mathbb{R}^{m\times k}\). Given a vector \(a_i\), its projection onto the k-dimensional PCA space of \(A\) is \(y_i^T = a_i^T V\), or equivalently \(y_i = V^Ta_i\). Note that \(\|y_i\| = \|V^Ta_i\|\). It is possible to use Lemma 5.4 to multiply the quantum state \(|a_i\rangle\) by \(V^T\), appropriately padded with 0s to be a square \(\mathbb{R}^{m\times m}\) matrix. Lemma 5.4 states that it is possible to create an approximation \(|\overline{y}_i\rangle\) of the state \(|y_i\rangle = |V^Ta_i\rangle\) in time \(\widetilde{O}\left(\frac{\mu(V^T)\log(1/\epsilon)}{\gamma}\right)\) with probability \(1-1/\text{poly}(m)\), such that \(\||y_i\rangle - |\overline{y}_i\rangle\| \leq \epsilon\). Since \(V^T\) has rows with unit \(\ell_2\) norm, we can use a result from Theorem IV.1 of (Kerenidis and Prakash 2020) to prepare efficient quantum access to it with \(\mu(V^T)=1\). Choosing the parameter as \(\gamma = \|V^Ta_i\|/\|a_i\|\), we get a run-time of \(\widetilde{O}(\frac{\|a_i\|}{\|y_i\|}\log(1/\epsilon))\). We can consider the term \(\log(1/\epsilon)\) to be negligible, as, for instance, an error \(\epsilon = 10^{-17}\) would not be relevant in practice, while accounting for a factor \(17\) in the run-time. We conclude that the state \(|y_i\rangle\) can be created in time \(\widetilde{O}\left(\frac{\|a_i\|}{\|y_i\|}\right)\) with probability \(1-1/\text{poly}(m)\) and that its norm can be estimated to relative error \(\eta\) in time \(\widetilde{O}\left(\frac{\|a_i\|}{\|y_i\|}\frac{1}{\eta}\right)\).

For what concerns the error, we start by bounding \(\|y_i - \overline{y}_i\|\) and use Lemma D.4 to bound the error on the quantum states. We assume to have estimates \(\overline{v}_i\) of the columns of \(V\) such that \(\|v_i - \overline{v}_i\| \leq \delta\).

\[\| V - \overline{V} \|_F = \sqrt{\sum_i^n\sum_j^k \left(v_{ij} - \overline{v}_{ij} \right)^2} \leq \sqrt{k}\delta\]

Considering that \(\|y_i - \overline{y}_i\| = \|a_i^TV^{(k)} - a_i^T\overline{V}^{(k)}\| \leq \|a_i\|\sqrt{k}\delta\), we can use Lemma D.4 to state

\[\||y_i\rangle - |\overline{y}_i\rangle\| \leq \frac{\|a_i\|}{\|y_i\|}\sqrt{2k}\delta = \frac{\|a_i\|}{\|y_i\|}\xi.\] We can set \(\delta = \frac{\xi}{\sqrt{2k}}\) and require \(\| V - \overline{V} \|_F \leq \frac{\xi}{\sqrt{2}}\).

This result also holds when \(a_i\) is an new data point, not necessarily stored in \(A\). Note that \(\frac{\|y_i\|}{\|a_i\|}\) is expected to be close to \(1\), as it is the percentage of support of \(a_i\) on the new feature space spanned by \(V^{(k)}\). We formalize this better using Definition 9.1 below.

Corollary 9.2 (qPCA: matrix dimensionality reduction) Let \(\xi\) be a precision parameter and \(p\) be the amount of variance retained after the dimensionality reduction. Let there be efficient quantum access to \(A= U\Sigma V^T \in \mathbb{R}^{n \times m}\) and to its top k right singular vectors \(\overline{V}^{(k)} \in \mathbb{R}^{m \times k}\), such that \(\|V^{(k)} - \overline{V}^{(k)}\| \leq \frac{\xi\sqrt{p}}{\sqrt{2}}\). There exists a quantum algorithm that, with probability at least \(1-1/\text{poly}(m)\), creates the state \(|\overline{Y}\rangle = \frac{1}{\|Y\|_F}\sum_i^n \|y_{i,\cdot}\||i\rangle|y_{i,\cdot}\rangle\), proportional to the projection of \(A\) in the PCA subspace, with error \(\||Y\rangle - |\overline{Y}\rangle\| \leq \xi\) in time \(\widetilde{O}(1/\sqrt{p})\). An estimate of \(\|\overline{Y}\|_F\), to relative error \(\eta\), can be computed in \(\widetilde{O}(\frac{1}{\sqrt{p}\eta})\).

Proof. In the proof we use \(V\) to denote the matrix \(V^{(k)} \in \mathbb{R}^{m\times k}\). Using the same reasoning as the proof above and giving a closer look at the proof of Lemma 5.4 (Lemma 24 (Chakraborty, Gilyén, and Jeffery 2019)), we see that it is possible to create the state \(|0\rangle(\overline{V}^T|a_i\rangle) + |0_\perp\rangle\) in time \(\widetilde{O}(1)\) and that the term \(\widetilde{\frac{1}{\gamma}}\) is introduced to boost the probability of getting the right state. If we apply Lemma 5.4 without the amplitude amplification step to the superposition of the rows of \(A\), we obtain the following mapping in time \(\widetilde{O}(1)\): \[\begin{align} |A\rangle = \frac{1}{\|A\|_F} \sum_i^n \|a_{i,\cdot}\||i\rangle |a_{i,\cdot}\rangle \mapsto \frac{1}{\|A\|_F} \sum_i^n (\|y_{i,\cdot}\||0\rangle|i\rangle|y_{i,\cdot}\rangle + \|y_{i,\cdot\perp}\||0_\perp\rangle), \end{align}\] where \(\|y_{i,\cdot\perp}\|\) are normalization factors. Keeping in mind that \(\|A\|_F = \sqrt{\sum_i^r \sigma_i^2}\) and \(\|Y\|_F = \sqrt{\sum_i^n \|y_{i,\cdot}\|^2} = \sqrt{\sum_i^k \sigma_i^2}\), we see that the amount of explained variance is \(p = \frac{\sum_i^k \sigma_i^2}{\sum_j^r \sigma_j^2}=\left(\frac{\|Y\|_F}{\|A\|_F}\right)^2\). The probability of obtaining \(|Y\rangle = \frac{1}{\|Y\|_F} \sum_i^n \|y_{i,\cdot}\||i\rangle|y_{i,\cdot}\rangle\) is \(p = \frac{\|Y\|_F^2}{\|A\|_F^2} = \frac{\sum_i^n \|y_{i,\cdot}\|^2}{\|A\|_F^2}\). We conclude that, using \(\widetilde{O}(1/\sqrt{p})\) rounds of amplitude amplification, we obtain \(|Y\rangle\) with proability \(1-1/\text{poly}(m)\) (5.1).

Considering that \(\|Y - \overline{Y}\| = \|AV^{(k)} - A\overline{V}^{(k)}\| \leq \|A\|\sqrt{k}\delta\), we can use Lemma D.4 to state \[\begin{align} \||Y\rangle - |\overline{Y}\rangle\| \leq \frac{\|A\|_F}{\|Y\|_F}\sqrt{2k}\delta = \xi. \end{align}\] We can set \(\delta = \frac{\xi}{\sqrt{2k}}\frac{\|Y\|_F}{\|A\|_F} = \frac{\xi\sqrt{p}}{\sqrt{2k}}\), so we require \(\| V - \overline{V} \|_F \leq \frac{\xi\sqrt{p}}{\sqrt{2}}\).

The error requirements of the two corollary propagate to the run-time of the model extraction in the following way.

Corollary 9.3 (qPCA: fitting time) Let \(\epsilon\) be a precision parameter and \(p=\frac{\sum_{i: \overline{\sigma}_i \geq \theta} \sigma_i^2}{\sum_j^r \sigma_j^2}\) the amount of variance to retain, where \(\|\sigma_i - \overline{\sigma}_i\|\leq \epsilon\). Given efficient quantum access to a matrix \(A \in \mathbb{R}^{n \times m}\), the run-time to extract \(V^{(k)} \in \mathbb{R}^{m \times k}\) for corollaries 9.1, @ref{cor:qpca:matrix} is \(\widetilde{O}\left(\frac{\mu(A)k^2m}{\theta\epsilon\xi^2}\right)\).

We state the proof for Corollary 9.2, as its error is more demanding than the one of Corollary 9.1. This way, the proof stands for both cases. ::: {.proof} The procedure to train the model consists in using Theorem ?? to extract the threshold \(\theta\), given the amount of variance to retain \(p\), and to leverage Theorem 7.5 to extract the \(k\) right singular vectors that compose \(V \in \mathbb{R}^{m \times k}\). The run-time of Theorem ?? is smaller than the one of Theorem 7.5, so we can focus on the last one. From the proof of Corollary 9.2, we know that to have \(\|V - \overline{V} \|_F \leq \frac{\xi\sqrt{p}}{\sqrt{2}}\) we need \(\|v_i - \overline{v}_i\| \leq \frac{\xi\sqrt{p}}{\sqrt{2k}}\). Substituting \(\delta = \frac{\xi\sqrt{p}}{\sqrt{2k}}\) in the run-time of Theorem 7.5, we get \(\widetilde{O}(\frac{\mu{A}k^2m}{p^{3/2}\theta\epsilon\xi^2})\). If we consider that \(p\) to be a reasonable number (e.g., at least grater than 0.05), we can consider it a constant factor that is independent from the input’s size. The asymptotic run-time is proved to be \(\widetilde{O}(\frac{\mu{A}k^2m}{\theta\epsilon\xi^2})\). :::

We see that is the algorithm is training the model for Corollary 9.2, the run-time has a dependency on \(1/p^{3/2}\), but this term is constant and independent from the size of the input dataset. With this additional \(1/p^{3/2}\) cost, the error of Corollary 9.1 drops to \(\xi\) for every row of the matrix and generally decreases in case of new data points.

Definition 9.1 (PCA-representable data) A set of \(n\) data points described by \(m\) coordinates, represented through a matrix \(A=\sum_i^r\sigma_i u_iv_i^T \in \mathbb{R}^{n \times m}\) is said to be PCA-representable if there exists \(p \in [\frac{1}{2},1], \varepsilon \in [0, 1/2], \beta \in [p-\varepsilon, p+\varepsilon], \alpha \in [0,1]\) such that: - \(\exists k \in O(1)\) such that \(\frac{\sum_i^k \sigma^2_i}{\sum_i^m \sigma^2_i}= p\) - for at least \(\alpha n\) points \(a_i\) it holds \(\frac{\left\lVert y_i\right\rVert}{\left\lVert a_i\right\rVert} \geq \beta\), where \(\left\lVert y_i\right\rVert = \sqrt{\sum_i^k |\langle a_i|v_j\rangle|^2} \left\lVert a_i\right\rVert\).

Thanks to the this statement, it is possible to bound the run-time of Corollary 9.1 with a certain probability.

Lemma 9.3 (qPCA on PCA-representable datasets) Let \(a_i\) be a row of \(A \in \mathbb{R}^{n\times d}\). Then, the runtime of Corollary 9.1 is \(\frac{\left\lVert a_i\right\rVert}{\left\lVert\overline{y}_i\right\rVert} = \frac{1}{\beta} = O(1)\) with probability greater than \(\alpha\).

It is known that, in practical machine learning datasets, \(\alpha\) is a number fairly close to one. We have tested the value of \(\rho\) for the MNIST dataset, the interested reader can read more about it in the section about the experiments. Using the same framework and proof techniques, it is possible to produce similar results for the representations of Correspondence Analysis and Latent Semantic Analysis, that are introduced in the next two sections.

Remark: Note that Theorem 1 from (Yu et al. 2019) propose a lower bound for a quantity similar to \(\alpha\). However, their result seems to be a loose bound: using their notation and setting \(\eta=1, \theta=1\) they bound this quantity with \(0\), while a tight bound should be \(1\).

9.1.2 Quantum Correspondence Analysis

Correspondence analysis is a multivariate statistical tool, from the family of factor analysis methods, used to explore relationships among categorical variables. Consider two random variables \(X\) and \(Y\) with possible outcomes in \(\{x_1, \cdots, x_n\}\) and \(\{y_1, \cdots, y_m\}\) respectively, the model of Correspondence Analysis allows to represent the outcomes as vectors in two related Euclidean spaces. These spaces can be used for analysis purposes in data visualization, exploration and in other unsupervised machine learning tasks.

9.1.2.0.1 Connection to Singular Value Decomposition

Given a contingency table for \(X\) and \(Y\), it is possible to compute the matrix \(A = D_X^{-1/2}(\hat{P}_{X,Y} - \hat{p}_X\hat{p}_Y^T)D_Y^{-1/2} \in \mathbb{R}^{n \times m}\), where \(\hat{P}_{X,Y} \in \mathbb{R}^{n \times m}\) is the estimated matrix of joint probabilities, \(\hat{p}_X \in R^n\) and \(\hat{p}_X \in \mathbb{R}^m\) are the vectors of marginal probabilities, and \(D_X^{-1/2} = diag(\hat{p}_X)\), \(D_Y^{-1/2} = diag(\hat{p}_Y)\). The computation of \(A\) can be done in time proportional to the number of non zero entries of the contingency table. The singular value decomposition of \(A\) is strictly related to the model of correspondence analysis (Greenacre 1984), (Hsu, Salamatian, and Calmon 2019). The vector space for \(X\) is \(D_X^{-1/2}U \in \mathbb{R}^{n\times k}\), while the one for \(Y\) is \(D_Y^{-1/2}V\in \mathbb{R}^{m\times k}\). Note that these spaces are not affected by the normalization of \(A\). Like in PCA, it is possible to choose only a subset of the orthogonal factors as coordinates for the representation. Factor scores and factor score ratios measure of how much ``correspondence’’ is captured by the respective orthogonal factor, giving an estimate of the quality of the representation.

9.1.2.0.2 Quantum algorithms for CA

Similarly to what we have already discussed, it is possible to extract the model for CA by creating quantum access to the matrix \(A\) and using Theorems 7.3, 7.4, and 7.5 to extract the orthogonal factors, the factor scores and the factor score ratios in time \(\widetilde{O}\left(\left( \frac{1}{\gamma^2} + \frac{k(n+m)}{\theta\delta^2}\right)\frac{\mu(A)}{\epsilon}\right)\). We provide a theoretical bound for the data representations in Lemma 9.4.

Lemma 9.4 (Accuracy of qCA's representation (classical)) Let \(A \in \mathbb{R}^{n \times m}\) be a matrix. Given some approximate procedures to retrieve unit estimates \(\overline{u}_i\) of the left singular vectors \(u_i\) such that \(\|\overline{u}_i - u_i\| \leq \delta\), the error on \(D_{X}^{-1/2}U\) can be bounded as \(\left|\left| D_{X}^{-1/2}U- D_{X}^{-1/2}\overline{U}\right|\right|_F \leq \|D_{X}^{-1/2}\|_F\sqrt{k}\delta\). Similarly, \(\left|\left|D_{Y}^{-1/2}V - D_{Y}^{-1/2}\overline{V}\right|\right|_F \leq \|D_{Y}^{-1/2}\|_F\sqrt{k}\delta.\)

Proof. \[\left|\left|D_X^{-1/2}\overline{U} - D_X^{-1/2}U\right|\right|_F \leq \|D_X^{-1/2}\|_F\left|\left|\overline{U} - U\right|\right|_F \leq \|D_X^{-1/2}\|_F\sqrt{k}\delta.\]

Note that CA’s representation does is independent of the scaling of the singular vectors, so the normalization of the dataset does not affect the representation in any way.

9.1.3 Quantum Latent Semantic Analysis

Latent semantic analysis is a data representation method to represent words and text documents as vectors in Euclidean spaces so that it is possible to make comparisons among terms, among documents and between terms and documents. The representation spaces of LSA automatically model synonymy and polysemy (Deerwester et al. 1990), and their applications in machine learning range from topic modeling to document clustering and retrieval.

9.1.3.0.1 Connection to Singular Value Decomposition

The input of LSA is a contingency table of \(n\) words and \(m\) documents \(A \in \mathbb{R}^{n \times m}\). Inner products of rows \(AA^T=U\Sigma ^2U^T\) express the distances between words. Inner products of columns \(A^TA=V\Sigma ^2V^T\) express the distances between documents. The \(a_{ij}\) element of \(A=U\Sigma V^T\) expresses the distance between word \(i\) and document \(j\). With this definitions it is possible to compute: - a space for words comparisons \(U\Sigma \in \mathbb{R}^{n\times k}\); - a space for documents comparisons \(V\Sigma \in \mathbb{R}^{m\times k}\); - two spaces for words and documents comparisons \(U\Sigma ^{1/2} \in \mathbb{R}^{n\times k}\) and \(V\Sigma ^{1/2} \in \mathbb{R}^{m\times k}\). When using LSA for latent semantic indexing, one wishes to represent the query as a vector in the document comparison space. The new vector is computed in the following way \(v_q^T = x_q^TU\Sigma ^{-1}\), where \(x_q \in \mathbb{R}^{n}\) is obtained using the same criteria used to store a document in \(A\). The orthogonal factors used to compare documents can be seen as latent topics whose importance is proportional to the corresponding factor score ratios.

9.1.3.0.2 Quantum algorithms for LSA

As previously discussed, the cost of model extraction is \(\widetilde{O}\left(\left( \frac{1}{\gamma^2} + \frac{k(n+m)}{\theta\delta^2}\right)\frac{\mu(A)}{\epsilon}\right)\). In some applications, such as document retrieval, the data analyst maintains a fixed number of singular values and vectors, regardless of the factor score ratios. In (Deerwester et al. 1990), \(k=100\) is found to be a good number for document indexing. Similarly, we believe that it is possible to empirically determine a threshold \(\theta\) to use in practice. Determining such threshold would reduce the complexity of model computation to the one of Theorem 7.5: \(\widetilde{O}\left( \frac{k(n+m)}{\theta\delta^2}\frac{\mu(A)}{\epsilon}\right)\).

For what concerns the error bounds on the retrieved data representation models, we already know from Lemma 9.1 that it is possible to retrieve an approximation \(\overline{U\Sigma }\) and \(\overline{V\Sigma }\) with precision \(\sqrt{k}(\delta + \epsilon)\), where \(\delta\) is the precision on the singular vectors and \(\epsilon\) the precision on the singular values. To provide bounds on the estimations of \(U\Sigma ^{1/2}\), \(V\Sigma ^{1/2}\), and \(U\Sigma ^{-1}\) we introduce Lemma 9.5 and Lemma @ref{lem:accuracyUE-1eVE-1}.

Lemma 9.5 (Accuracy of qLSA's representations (classical)) Let \(A \in \mathbb{R}^{n \times m}\) be a matrix with \(\sigma_{max} \leq 1\). Given some approximate procedures to retrieve estimates \(\overline{\sigma}_i\) of the singular values \(\sigma_i\) such that \(\|\overline{\sigma}_i - \sigma_i\| \leq \epsilon\) and unitary estimates \(\overline{u}_i\) of the left singular vectors \(u_i\) such that \(\|\overline{u}_i - u_i\| \leq \delta\), the error on \(U\Sigma ^{1/2}\) can be bounded as \(\left|\left| U\Sigma ^{1/2} - \overline{U}\overline{\Sigma }^{1/2} \right|\right|_F \leq \sqrt{k}\left(\delta + \frac{1}{2\sqrt{\theta}}\right)\). Similarly, \(\left|\left| V\Sigma ^{1/2} - \overline{V}\overline{\Sigma }^{1/2} \right|\right|_F \leq \sqrt{k}\left(\delta + \frac{1}{2\sqrt{\theta}}\right)\).

Proof. We start by bounding \(\|\sqrt{\overline{\sigma}_i} - \sqrt{\sigma_i}\|\). Let’s define \(\epsilon = \gamma \sigma_i\) as a relative error: \[ \|\sqrt{\sigma_i + \epsilon} - \sqrt{\sigma_i}\| = \|\sqrt{\sigma_i + \gamma \sigma_i} - \sqrt{\sigma_i}\| = \|\sqrt{\sigma_i}(\sqrt{1 + \gamma} - 1)\| =\] \[ \sqrt{\sigma_i}\left\lVert\frac{(\sqrt{1 + \gamma} - 1)(\sqrt{1 + \gamma} + 1)}{\sqrt{1 + \gamma} + 1}\right\rVert = \sqrt{\sigma_i}\left\lVert\frac{\gamma + 1 - 1}{\sqrt{1 + \gamma} + 1}\right\rVert \leq \sqrt{\sigma_i}\frac{\gamma}{2}.\]

By definition \(\gamma = \frac{\epsilon}{\sigma_i}\) and we know that \(\sigma_{min} \geq \theta\): \[ \|\sqrt{\overline{\sigma}_i} - \sqrt{\sigma_i}\| \leq \frac{\sqrt{\sigma_i}}{\sigma_1}\frac{\epsilon}{2} = \frac{\epsilon}{2\sqrt{\sigma_i}} \leq \frac{\epsilon}{2\sqrt{\theta}}. \]

Using the bound on the square roots, we can bound the columns of \(\overline{U}\overline{\Sigma }^{1/2}\): \[ \left\lVert\sqrt{\overline{\sigma_i}}\overline{u}_i - \sqrt{\sigma_i}u_i\right\rVert \leq \left\lVert\left(\sqrt{\sigma_i} + \frac{\epsilon}{2\sqrt{\theta}}\right)\overline{u}_i - \sqrt{\sigma_i}u_i\right\rVert = \] \[ \left\lVert\sqrt{\sigma_i}(\overline{u}_i - u_i) + \frac{\epsilon}{2\sqrt{\theta}}\overline{u}_i\right\rVert \leq \sqrt{\sigma_i}\delta + \frac{\epsilon}{2\sqrt{\theta}} \leq \delta\sqrt{\|A\|} + \frac{\epsilon}{2\sqrt{\theta}}. \]

From the error bound on the columns we derive the bound on the matrices: \[ \left\lVert\overline{U}\overline{\Sigma }^{1/2} - U\Sigma ^{1/2}\right\rVert_F = \sqrt{\sum_j^k \left( \left\lVert \sqrt{\overline{\sigma}_j}\overline{u}_j - \sqrt{\sigma_j} u_j \right\rVert \right)^2} \leq \sqrt{k}\left(\delta\sqrt{\|A\|} + \frac{\epsilon}{2\sqrt{\theta}}\right). \]

Finally, since \(\sigma_{max} \leq 1\), we get that \(\|\overline{U}\overline{\Sigma }^{1/2} - U\Sigma ^{1/}\|_F \leq \sqrt{k}(\delta + \frac{\epsilon}{2\sqrt{\theta}})\).

Lemma 9.6 (Accuracy of qLSA's representation for new document queries (classical)) Let \(A \in \mathbb{R}^{n \times m}\) be a matrix. Given some approximate procedures to retrieve estimates \(\overline{\sigma}_i\) of the singular values \(\sigma_i\) such that \(|\overline{\sigma}_i - \sigma_i| \leq \epsilon\) and unitary estimates \(\overline{u}_i\) of the left singular vectors \(u_i\) such that \(\|\overline{u}_i - u_i\| \leq \delta\), the error on \(U\Sigma ^{-1}\) can be bounded as \(\left|\left| U\Sigma ^{-1} - \overline{U}\overline{\Sigma }^{-1} \right|\right|_F \leq \sqrt{k}\left(\frac{\delta}{\theta} + \frac{\epsilon}{\theta^2 - \theta\epsilon}\right)\). Similarly, \(\left|\left| V\Sigma ^{-1} - \overline{V}\overline{\Sigma }^{-1} \right|\right|_F \leq \sqrt{k}(\frac{\delta}{\theta} + \frac{\epsilon}{\theta^2 - \theta\epsilon})\).

Proof. We start by bounding \(\| \frac{1}{\overline{\sigma_i}} - \frac{1}{\sigma_i}\|\), knowing that \(\sigma_{min} \geq \theta\) and \(\epsilon < \theta\): \[ \left|\left|\frac{1}{\overline{\sigma}_i} - \frac{1}{\sigma_i}\right|\right| \leq \left|\left|\frac{1}{\sigma_i - \epsilon} - \frac{1}{\sigma_i}\right|\right| \leq \frac{\epsilon}{\theta^2 - \theta\epsilon}. \]

From the bound on the inverses, we can obtain the bound on the columns of \(\overline{U}\overline{\Sigma }^{-1}\): \[ \left\lVert\frac{1}{\overline{\sigma_i}}\overline{u}_i - \frac{1}{\sigma_i}u_i\right\rVert \leq \left\lVert\left(\frac{1}{\sigma_i} \pm \frac{\epsilon}{\theta^2 - \theta\epsilon}\right)\overline{u}_i - \frac{1}{\sigma_i}u_i\right\rVert \leq \frac{1}{\sigma_i}\delta + \frac{\epsilon}{\theta^2 - \theta\epsilon} \leq \frac{\delta}{\theta} + \frac{\epsilon}{\theta^2 - \theta\epsilon}. \]

To end the proof, we can compute the bound on the matrices: \[ \left\lVert\overline{U}\overline{\Sigma }^{-1} - U\Sigma ^{-1}\right\rVert_F = \sqrt{\sum_j^k \left( \left\lVert\frac{1}{\overline{\sigma}}_j\overline{u}_{j} - \frac{1}{\sigma_j} u_{j} \right\rVert \right)^2} \leq \sqrt{k}\left(\frac{\delta}{\theta} + \frac{\epsilon}{\theta^2 - \theta\epsilon}\right). \]

As for qPCA, we provide the bounds in case we want to undo data normalization step. The proofs for these lemmas proceeds like the one of Lemma 9.2.

Lemma 9.7 (Non-normalized accuracy of qLSA's representations (classical)) The estimated representations of Lemma 9.5, for the not-normalized matrix \(A\), are \(\sqrt{\|A\|}~\overline{U}\overline{\Sigma }^{1/2}\) and \(\sqrt{\|A\|}~\overline{V}\overline{\Sigma }^{1/2}\). The error bounds become \(\left|\left|\sqrt{\|A\|}~\overline{U}\overline{\Sigma }^{1/2} - \sqrt{\|A\|}U\Sigma ^{1/2}\right|\right|_F \leq \sqrt{k\|A\|}(\epsilon+\delta)\) and \(\left|\left|\sqrt{\|A\|}~\overline{V}\overline{\Sigma }^{1/2} - \sqrt{\|A\|}V\Sigma ^{1/2}\right|\right|_F \leq \sqrt{k\|A\|}(\epsilon+\delta)\).

Lemma 9.8 (Non-normalized accuracy of qLSA's representation for new document queries (classical)) The estimated representations of Lemma 9.6, for the not-normalized matrix \(A\), are \(\frac{1}{||A||}\overline{U}\overline{\Sigma }^{-1}\) and \(\frac{1}{||A||}\overline{V}\overline{\Sigma }^{-1}.\) The error bounds become \(\left|\left|\frac{1}{||A||}\overline{U}\overline{\Sigma }^{-1} - \frac{1}{||A||}U\Sigma ^{1/2}\right|\right| \leq \frac{\sqrt{k}}{||A||}\left(\frac{\delta}{\theta} + \frac{\epsilon}{\theta^2 - \theta\epsilon}\right)\) and \(\left|\left|\frac{1}{||A||}\overline{V}\overline{\Sigma }^{-1} - \frac{1}{||A||}V\Sigma ^{1/2}\right|\right| \leq \frac{\sqrt{k}}{||A||}\left(\frac{\delta}{\theta} + \frac{\epsilon}{\theta^2 - \theta\epsilon}\right)\).

9.2 Supervised algorithms

9.2.1 Quantum Slow Feature Analysis

Slow Feature Analysis (SFA) is a dimensionality reduction technique proposed in the context of computational neurosciences as a way to model part of the visual cortex of humans. In the last decades, it has been applied in various areas of machine learning. In this chapter we propose a quantum algorithm for slow feature analysis, and detail its application for performing dimensionality reduction on a real dataset. We also simulate the random error that the quantum algorithms might incur. We show that, despite the error caused by the algorithm, the estimate of the model that we obtain is good enough to reach high accuracy on a standard dataset widely used as benchmark in machine learning. Before providing more details on this result, we give a brief description of dimensionality reduction and introduce the model of slow feature analysis in this context.

SFA has been shown to model a kind of neuron (called complex cell) situated in the cortical layer in the primary visual cortex (called V1) (P. Berkes and Wiskott 2005). SFA can be used in machine learning as a DR algorithm, and it has been successfully applied to enhance the performance of classifiers (Zhang Zhang and Dacheng Tao 2012), (Pietro Berkes 2005). SFA was originally proposed as an (Wiskott Laurenz and Wiskott 1999). Its task was to learn slowly varying features from generic input signals that vary rapidly over time (Pietro Berkes 2005) (Wiskott Laurenz and Wiskott 1999). SFA has been motivated by the , that postulates that while the primary sensory receptors (like the retinal receptors in an animal’s eye) are sensitive to very small changes in the environment and thus vary on a very fast time scale, the internal representation of the environment in the brain varies on a much slower time scale. The slowness principle is a hypothesis for the functional organization of the visual cortex and possibly other sensory areas of the brain (Wiskott et al. 2011) and it has been introduced as a way to model the transformation invariance in natural image sequences (Zhang Zhang and Dacheng Tao 2012). SFA is an algorithm that formalizes the slowness principle as a nonlinear optimization problem. In (Blaschke and Wiskott 2004, sprekeler2014extension), SFA has been used to do nonlinear blind source separation. Although SFA has been developed in the context of computational neurosciences, there have been many applications of the algorithm to solve ML related tasks. A prominent advantage of SFA compared to other algorithms is that it is almost hyperparameter-free. The only parameters to chose are in the preprocessing of the data, e.g. the initial PCA dimension and the nonlinear expansion that consists of a choice of a polynomial of (usually low) degree \(p\). Another advantage is that it is guaranteed to find the optimal solution within the considered function space (Escalante-B and Wiskott 2012). For a detailed description of the algorithm, we suggest (Sprekeler and Wiskott 2008). With appropriate preprocessing, SFA can be used in conjunction to a supervised algorithm to acquire classification capabilities. For instance it has been used for pattern recognition to classify images of digits in the famous MNIST database (Pietro Berkes 2005). SFA can be adapted to be used to solve complex tasks in supervised learning, like face and human action recognition (Gu, Liu, and Wang 2013) , [Zhang Zhang and Dacheng Tao (2012; L. Sun et al. 2014).

We can use SFA for classification in the following way. One can think of the training set a set of vectors \(x_i \in \mathbb{R}^d , i \in n\). Each \(x_i\) belongs to one of \(K\) different classes. A class \(T_k\) has \(|T_k|\) vectors in it. The goal is to learn \(K-1\) functions \(g_j( x_i), j \in [K-1]\) such that the output \(y_i = [g_1( x_i), \cdots , g_{K-1}( x_i )]\) is very similar for the training samples of the same class and largely different for samples of different classes. Once these functions are learned, they are used to map the training set in a low dimensional vector space. When a new data point arrive, it is mapped to the same vector space, where classification can be done with higher accuracy. SFA projects the data points onto the subspace spanned by the eigenvectors associated to the \(k\) smallest eigenvalues of the derivative covariance matrix of the data, which we define in the next section.

9.2.1.1 The SFA model

Now we introduce the optimization problem in its most general form as it is commonly stated for classification (Pietro Berkes 2005). Let \(a=\sum_{k=1}^{K} {\binom{ |T_k| }{2}}.\) For all \(j \in [K-1]\), minimize: \[\Delta(y_j) = \frac{1}{a} \sum_{k=1}^K \sum_{\substack{s,t \in T_k \\ s<t}} \left( g_j( x_s) - g_j( x_t) \right)^2\] with the following constraints:

- \(\frac{1}{n} \sum_{k=1}^{K}\sum_{i\in T_k} g_j( x_i) = 0 \quad \forall j \in [K-1]\)

- \(\frac{1}{n} \sum_{k=1}^{K}\sum_{i \in T_k} g_j( x_i)^2 = 1 \quad \forall j \in [K-1]\)

- \(\frac{1}{n} \sum_{k=1}^{K}\sum_{i \in T_k} g_j( x_i)g_v( x_i) = 0 \quad \forall v < j\)

The minimization of the delta values \(\Delta(y_j)\) encodes the requirement on the output signal to vary “as slow as possible”, and thus the delta values are our measure of slowness. They are the average of the square of the first order derivative (over time) of the \(j\)-th component of the output signal \(y_t\). The first requirement states that the average over time of each component of the signal should be zero, and it is stated just for convenience, such that the other two requirements take a simple form. The second requirement asks for the variance over time of each component of the signal to be \(1\). It is equivalent to saying that each signal should carry some information and avoid the trivial solution \(g_j(\cdot) = 0\). The third requirement is to say that we want the signals to be decorrelated with each other. This also introduces an order, such that the first signal is the slowest, the second signal is the second slowest and so on. The first and the second constraint also avoid the trivial solution \(y_i = 0\). Intuitively, the decorrelation constraint forces different functions \(g_j\) to encode different ``aspects’’ of the input, maximizing the information carried by the output signal.

In order for the minimization problem to be computationally feasible at scale, the \(g_j\)’s are restricted to be linear functions \(w_j\) such that the output signal becomes \(y_i = [w_1^T x_i, \cdots w_{K-1}^T x_i ]^T\) or else \(Y=XW\), where \(X \in \mathbb{R}^{n \times d}\) is the matrix with rows the input samples and \(W \in \mathbb{R}^{d \times (K-1)}\) the matrix that maps the input matrix \(X\) into a lower dimensional output \(Y \in \mathbb{R}^{n \times (K-1)}\). In case it is needed to capture nonlinear relations in the dataset, one performs a standard nonlinear polynomial expansion on the input data as preprocessing. Usually, a polynomial expansion of degree \(2\) or \(3\) is chosen. For example we can take:

\[x=[x_1, x_2, x_3] \to \left[x_1^2, x_1x_2, x_1x_3, x_2^2, x_2x_3, x_3^2,x_1, x_2, x_3 \right].\]

The choice of the nonlinear expansion is important for using SFA in machine learning contexts. If it is a low dimensional expansion, it might not solve the task with high accuracy, while if the dimension is too high, it might overfit the training data, and therefore not generalize properly to test data. This technique also goes under the name of polynomial kernel.

We also need to satisfy the constraint on the average of the signal being zero and have unit variance. This is not a strong requirement, since it can be enforced beforehand, by preprocessing the dataset. This requires only linear time with respect to the dimensions of the data, and in practice consist in removing the mean and scale the components by their variance. Namely, we assume that the \(j\)-th component of the \(i\)-th vector in the dataset satisfies the condition:

\[(x_i)_j := \frac{ (\tilde{x}_i)_j - E[(\tilde{x}_i)_j] }{ \sqrt{ E [ ( ( \tilde{x}_i)_j - E[( \tilde{x}_i)_j] )^2 ] } },\]

where with \(\tilde{x}(i)\) we define a raw signal with arbitrary mean and variance, \(E[\tilde x_j(i)]\) the expected value of a single component of the vectors. This allows us to rewrite the minimization problem including the constraints of zero mean and unit variance. We can restate the definition of the delta function as a generalized eigenvalue problem:

\[\begin{equation}\label{delta} \Delta(y_j) = \frac{ w^T_j A w_j}{ w_j^T B w_j} , \end{equation}\]

where the matrix \(B\) is called the sample covariance matrix and defined as:

\[\begin{equation} \label{defB} B:= \frac{1}{n}\sum_{i \in [n]} x_i x_i^T = X^T X \end{equation}\]

and the matrix \(A\) is called the sample derivative covariance matrix and defined as:

\[\begin{equation} \label{defA} A := \frac{1}{a} \sum_{k=1}^K \sum_{\substack{i, i' \in T_k \\ i < i'}} ( x_i - x_{i'} )( x_i - x_{i'} )^T = \frac{1}{a} \sum_{k=1}^K \dot{X_k}^T \dot{X_k} := \dot{X}^T \dot{X}. \end{equation}\]

Note also, that we can approximate the matrix \(A\) by subsampling from all possible pairs \((x_i, x_{i'})\) from each class and this is indeed what happens in practice.

9.2.1.1.1 Slowly varying signals

We formalize the concept of slowly varying signals. While this won’t have any utility in the classical algorithm, it will allow us to bound nicely the runtime of the quantum algorithm, in the case when the data has ``structure’’ that can be extracted by the SFA algorithm. In general, a slow signal is a signal that change slowly over time. This concept is formalized in the context of SFA by requiring that the whitened signal can be reconstructed without too much error from the projection on a few slow feature vectors. Formally, for a given \(K\), and a set of slow feature vectors \(w_1 \dots w_{K-1}\), we define a slowly varying signal as follows.

Definition 9.2 (Slowly varying signal) Let \(X \in \mathbb{R}^{n \times d}\) and \(Y \in [K]^{n}\) be a dataset and its labels. Let the rows \(x_i\) of \(X\) have whitened representation \(z_i\). For the \(K-1\) slow feature vectors \(w_j, j \in [K]\), let \(y_i\) be the slow feature representation of \(x_i\). We say that \(X\) is \(\gamma_K\)-slow if: \[\frac{\sum_{i=0}^n \left\lVert z_i\right\rVert}{\sum_{i=0}^n \left\lVert y_i\right\rVert} \leq \gamma_{K}\]

If we use SFA for doing dimensionality reduction in the context of supervised learning, a dataset is slowly varying if all the elements in the same class are similar to each other. In this way, by projecting the original images in the subspace spanned by a small number of slow feature vectors, we can reconstruct most of the information of the original dataset. We stress that this assumption is not needed for the quantum algorithm to work, but instead it will only be useful to give guarantees on the runtime of the algorithm.

9.2.1.1.2 The SFA algorithm

The SFA algorithm basically provides a solution to the generalized eigenvalue problem \(AW=\Lambda BW\) and outputs the eigenvectors corresponding to the smallest eigenvalues. As we said we assume that the data has been normalized and polynomially expanded.

The first step of the algorithm is to whiten the data. This will reduce the problem into a normal eigenvalue problem; the second step is to perform PCA in order to find the eigenvalues and eigenvectors. We refer to (Escalante-B and Wiskott 2012) for a more complete description.

9.2.1.1.2.1 Step 1: Whitening the data

Recall that \(X \in \mathbb{R}^{n \times d}, A,B \in \mathbb{R}^{d \times d}\). We now show how to whiten the data by right multiplying with the matrix \(B^{-1/2} = [ (X^TX)]^{-1/2}\). Then, the input matrix becomes \(Z=XB^{-1/2}\) and the covariance matrix of the whitened data \(Z^TZ\) is thus the identity.

Lemma 9.9 Let $Z:=XB^{-1/2} $ be the matrix of whitened data. Then \(Z^TZ = I\).% and \(B^{-1/2}\) is symmetric.

Proof. Let \(X=U\Sigma V^T\). We defined \(B=V\Sigma^{2}V^T\). As \(Z=U\Sigma V^T(V\Sigma^{-1}V^T)=UIV\) It follows that \(Z^TZ=I\).

As in the classical algorithm, we will whiten the data by left-applying the whitening matrix \(B^{-1/2}\). We will use matrix multiplication algorithms to create a state \(|Z\rangle\) proportional to the whitened data.

9.2.1.1.2.2 Step 2: Projection in slow feature space

The second step of SFA consists of outputting the \(K-1\) ``slowest’’ eigenvectors of the derivative covariance matrix of the whitened data \(A :=\dot{Z}^T\dot{Z}\), where \(\dot{Z}\) is defined similar to \(\dot{X}\) by using the whitened samples instead of the original ones. Note that taking the derivatives of the whitened data is equal to whitening the derivatives.

Lemma 9.10 Let \(A = \dot{Z}^T\dot{Z}\). Then \(A = (B^{-1/2})^T \dot{X}^T \dot{X}B^{-1/2}\).

Proof. \[\begin{eqnarray*} A & = & \dot{Z}^T\dot{Z} = \frac{1}{a} \sum_{k=1}^K \sum_{\substack{i, i' \in T_k \\ i < i'}} (z_i - z_{i'} )( z_i - z_{i'} )^T \\ & = & (B^{-1/2})^T \frac{1}{a} \sum_{k=1}^K \sum_{\substack{i, i' \in T_k \\ i < i'}} ( x_i - x_{_i'} )(x_i - x_{i'} )^T B^{-1/2} \\ & = & (B^{-1/2})^T \dot{X}^T \dot{X} B^{-1/2} \end{eqnarray*}\]

This observation allow us to whiten the data with a quantum procedure. Recall that the matrix \(A\) is usually approximated with a small fraction of all the possible derivatives, roughly linear (and not quadratic) on the number of data points. In our case we take the number of rows of the derivative matrix to be just double the number of data points, and in the experiment we show that this does not compromise the accuracy.

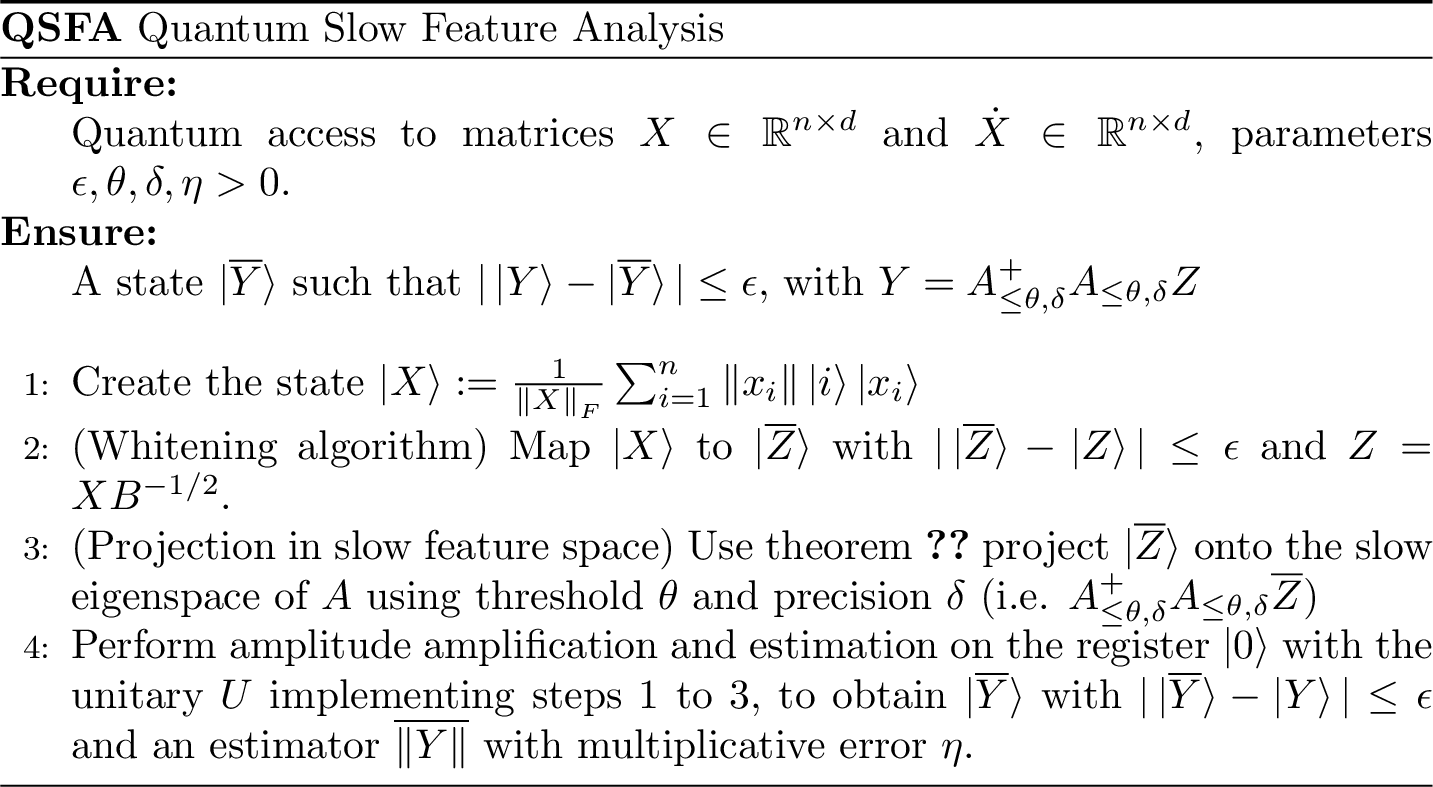

9.2.1.2 Quantum Slow Feature Analysis

We are finally ready to put forward a quantum procedure for SFA. Specifically, we want to map the input matrix \(X\) to the output matrix \(Y=XW\), and then we will see how to estimate classically the slow feature vectors \(W \in\mathbb{R}^{(K-1)\times d}\). For this, we assume to have quantum access to the matrices \(X\) and \(\dot{X}\), as in definition ??. We start by describing a circuit that approximately performs the unitary \(U_{QSFA} : |X\rangle\to |Y\rangle\) where \(|X\rangle\) is the quantum state we obtain by having quantum access to \(X\), the dataset, and \(|Y\rangle := \frac{1}{\left\lVert Y\right\rVert_F} \sum_{i=0}^n\left\lVert y_i\right\rVert|i\rangle|y_i\rangle\). As the classical algorithm, QSFA is divided in two parts. In the first step we whiten the data, i.e. we map the state \(|X\rangle\) to the state \(|Z\rangle=|XB^{-1/2}\rangle\), and in the second step we approximately project \(|Z\rangle\) onto the subspace spanned by the smallest eigenvectors of the whitened derivative covariance matrix \(A=\dot{Z}^T\dot{Z}\).

9.2.1.3 Step 1: Whitening the data

Recall that \(X = \sum_i \sigma_i u_iv_i^T \in \mathbb{R}^{n\times d},\) and \(A,B \in \mathbb{R}^{d \times d}\). We now show how to whiten the data having quantum access to the matrix \(X\). As \(B^{-1/2}\) is a symmetric matrix with eigenvectors the column singular vectors of \(X\) and eigenvalues equal to \(1/|\sigma_i|\). Using quantum linear algebra procedure, i.e. theorem 5.11, we can multiply with \(B^{-1/2}\) our state \(|X\rangle\). Thus, we have the following corollary.

Corollary 9.4 (Whitening algorithm) Assume to have quantum access to \(X = \sum_i \sigma_i u_iv_i^T \in \mathbb{R}^{n\times d}\), as in theorem ??. Let \(Z = XB^{-1/2}\) the matrix of whitened data. There exists a quantum algorithm that produces as output a state \(|\bar{Z}\rangle\) such that \(| |\bar{Z}\rangle - |Z\rangle| \leq \varepsilon\) in expected time \(\tilde{O}(\kappa(X)\mu(X) \log{1/\varepsilon}))\).

9.2.1.4 Step 2: Projection in slow feature space

The previous Corollary gives a way to build quantum access to the rows of the whitened matrix \(Z\), up to some error \(\epsilon\). Now we want to project this state onto the subspace spanned by the eigenvectors associated to the \(K-1\) ``slowest’’ eigenvectors of the whitened derivative covariance matrix \(A :=\dot{Z}^T\dot{Z}\), where \(\dot{Z}\) is the whitened derivative matrix \(\dot{Z} = \dot{X}B^{-1/2}\). Let \(\theta\) a threshold value and \(\delta\) a precision parameter, that governs the error we tolerate in the projection threshold. Recall that \(A_{\leq \theta, \delta}\) we denote a projection of the matrix \(A\) onto the vector subspace spanned by the union of the singular vectors associated to singular values that are smaller than \(\theta\) and some subset of singular vectors whose corresponding singular values are in the interval \([\theta, (1+\delta) \theta]\).

To perform the projection, we will need a threshold for the eigenvalues that will give us the subspace of the \(K-1\) slowest eigenvectors. A priori, we don’t know the appropriate threshold value, and thus it must be found experimentally through binary search since it depends on the distribution of singular values of the matrix representing the dataset. We can now describe and analyse the entire QSFA algorithm.

As in the previous section, we note that the eigenvalues of \(A_{\dot{Z}}\) are the squares of the singular values of \(\dot{Z}\), and the two matrices share the same column space: \(\dot{Z} = U\Sigma V^T\), and \(A_{\dot{Z}} = V\Sigma^2 V^T\). Claim 9.10 tells us that whitening the derivatives of the signal is equal to taking the derivatives of the whitened data. theorem 5.12 provides exactly the procedure for accessing the rows of \(\dot{Z}\), since we know how to multiply with \(\dot{X}\) and with \(B^{-1/2}\).

Theorem 9.1 (QSFA algorithm) Assume to have quantum access to \(X = \sum_i \sigma_i u_iv_i^T \in \mathbb{R}^{n\times d}\) and its derivative matrix \(\dot{X} \in \mathbb{R}^{n \log n \times d}\). Let \(\epsilon, \theta, \delta, \eta >0\). There exists a quantum algorithm that produces as output a state \(|\overline{Y}\rangle\) with \(| |\overline{Y}\rangle - |A^+_{\leq \theta, \delta}A_{\leq \theta, \delta} Z\rangle | \leq \epsilon\) in time \[\tilde{O}\left( \frac{ ( \kappa(X) + \kappa(\dot{X})) ( \mu({X})+ \mu(\dot{X}) ) }{\delta\theta} \gamma_{K-1} \right)\]

and an estimator \(\overline{\left\lVert Y\right\rVert}\) with \(| \overline{\left\lVert Y\right\rVert} - \left\lVert Y\right\rVert | \leq \eta \left\lVert Y\right\rVert\) with an additional \(1/\eta\) factor.

Proof. QSFA consists of two steps. The first step is the whitening, which can be performed in time \(\tilde{O}(\kappa(X)\mu(X)\log(1/\epsilon))\) and provide the state \(|\overline{Z}\rangle\) using Corollary 9.4. It is simple to verify that creating a state \(|Z\rangle\) of whitened data such that \(Z^TZ = I\) can be done using quantum access just to the matrix \(X\), as \(Z=XB^{-1/2}\). The second step is the projection of whitened data in the slow feature space, which is spanned by the eigenvectors of \(A=\dot{Z}^T\dot{Z}\). This matrix shares the same right eigenvectors of \(\dot{X}B^{-1/2}\), which is simple to check that we can efficiently access using the QRAM constructions of \(X\) and \(\dot{X}\). Using the algorithm for quantum linear algebra, i.e. theorem 5.12, we know that the projection (without the amplitude amplification) takes time equal to the ratio \(\mu(X) +\mu(\dot{X})\) over the threshold parameter, in other words it takes time \(\tilde{O}( \frac{(\mu({X})+ \mu(\dot{X}) }{\delta \theta})\). Finally, the amplitude amplification and estimation depends on the size of the projection of \(|\overline{Z}\rangle\) onto the slow eigenspace of \(A\), more precisely it corresponds to the factor \(O(\frac{\left\lVert\overline{Z}\right\rVert}{ \left\lVert A^+_{\leq \theta, \kappa}A_{\leq \theta, \kappa} \overline{Z} \right\rVert})\), which is roughly the same if we look at \(Z\) instead of \(\overline{Z}\). Note also that \(Z\) is the whitened data, which means that each whitened vector should look roughly the same on each direction. This implies that the ratio should be proportional to the ratio of the dimension of the whitened data over the dimension of the output signal. The final runtime of the algorithm is:

\[\tilde{O}\left( \left( \kappa(X)\mu(X)\log (1/\varepsilon) + \frac{ ( \kappa(X) + \kappa(\dot{X})) ( \mu({X})+ \mu(\dot{X}) ) }{\delta\theta} \right) \frac{\left\lVert Z\right\rVert}{ \left\lVert A^+_{\leq \theta, \delta}A_{\leq \theta, \delta} {Z} \right\rVert} \right)\] Note that the last ratio in this runtime was defined as \(\gamma_{K-1}\) in definition 9.2. From this, the runtime in the statement of the theorem follows.

Figure 9.1: Quantum algorithm for Slow Feature Analysis